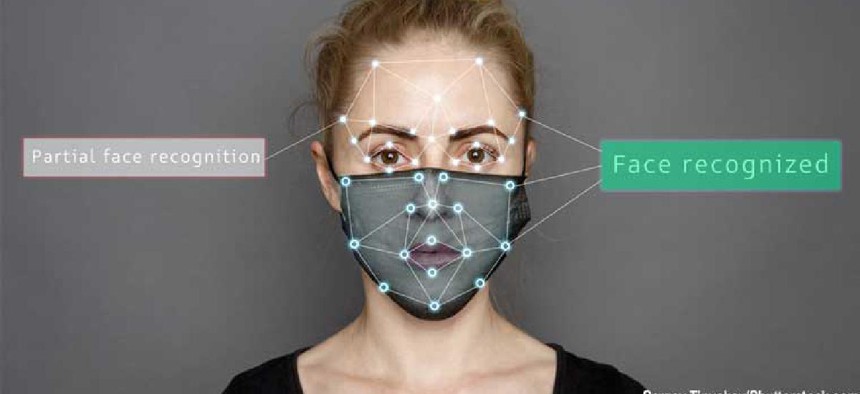

Technology getting better at recognizing masked subjects

According to the National Institute of Standards and Technology’s latest evaluation of vendors’ facial recognition algorithms, including those submitted after mid-March 2020, the software is becoming more adept at recognizing subjects wearing face masks.

Facial recognition algorithms are becoming more adept at recognizing subjects wearing face masks.

The National Institute of Standards and Technology’s Nov. 30 evaluation of vendors’ facial recognition algorithms includes those submitted after mid-March 2020, when more people were wearing masks to avoid contracting or spreading COVID-19. A previous evaluation, released in July, explored the effect of masked faces on algorithms submitted before March 2020 – none of which were designed to work with face masks. As expected, facial recognition software available before the pandemic often had trouble with masked faces.

NIST used the same images it had tested in the July evaluation: 6.2 million images of 1 million people collected from U.S. travel and immigration datasets. The team evaluated the algorithms’ ability to perform “one-to-one” matching, in which a good-quality application or reference photo is compared with a new and different photo of the same person taken at the border to verify the subject’s identity. For this most recent test, as with the July report, the “probe” images taken at the border, which were generally of lesser quality, had mask shapes digitally applied, mimicking a scenario where a person wearing a mask attempts to authenticate against a prior visa or passport photo, NIST said in its report.
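To make the idea of one-to-one matching concrete, here is a minimal sketch in Python. It assumes each photo has already been reduced to a numeric feature vector and that two photos are compared by cosine similarity against a fixed threshold. Those choices, along with the names `cosine_similarity` and `verify` and the threshold value, are illustrative assumptions, not a description of how NIST or any vendor's algorithm works.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(reference, probe, threshold=0.8):
    """Hypothetical one-to-one check: accept the probe photo as the same
    person as the reference photo if the similarity meets the threshold.
    The 0.8 default is an arbitrary illustrative value."""
    return cosine_similarity(reference, probe) >= threshold
```

In this sketch, a masked probe photo would be expected to produce a feature vector that sits further from the reference, pushing the similarity score toward or below the threshold.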

After testing 152 total algorithms – 65 of which were newly submitted – NIST found that all the algorithms submitted after the pandemic began had increased false non-match rates when the probe images were masked. It was noteworthy, NIST said, that they performed as well as they did given that 70% of the probe face was masked.
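A false non-match occurs when two photos of the same person are wrongly rejected. As a rough sketch, the false non-match rate can be computed as the share of same-person comparisons whose score falls below the decision threshold. The function name and the assumption that higher scores mean greater similarity are illustrative, not taken from the NIST report.

```python
def false_non_match_rate(genuine_scores, threshold):
    """Fraction of same-person (genuine) comparisons scored below the
    threshold, i.e. wrongly rejected. Assumes higher score = more similar."""
    if not genuine_scores:
        raise ValueError("need at least one genuine comparison score")
    misses = sum(1 for score in genuine_scores if score < threshold)
    return misses / len(genuine_scores)
```

Running the same function on scores from unmasked and masked probe images, at the same threshold, would show how much masking raises the rate for a given algorithm.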

Some of the results were unsurprising: Masks that cover more of the face have greater false non-match rates, and wide-width masks generally give higher false negative rates than do rounder masks.

Oddly, mask color also seems to matter. NIST used white, light-blue, red and black digital masks, and many algorithms had more errors with black and red masks than they did with light-blue and white masks. “The reason for observed accuracy differences between mask color is unknown but is a point for consideration by impacted developers,” the report said.

A few of the algorithms performed well with any combination of masked or unmasked faces. Some vendors’ “mask-agnostic” software can handle images regardless of whether the faces are masked, without being told.

Issues not addressed by NIST’s evaluation include the fact that algorithms may improve over time, the report said. The evaluation’s use of photos with digital masking, in which the masks are of relatively uniform color, shape and texture, rather than photos of subjects wearing a variety of real masks, is also considered a limitation. Because the study focused on the impact of masks, it didn’t address demographic variations among the subjects. NIST said it will wait until it has more capable mask-enabled algorithms to research that aspect.

Finally, the NIST research team stressed that users of facial recognition systems must understand how their software performs in their own use cases, ideally testing its operational performance with real masks rather than the digital simulations used in the study.

“It is incumbent upon the system owners to know their algorithm and their data,” NIST’s Mei Ngan, one of the study’s authors, said. “It will usually be informative to specifically measure accuracy of the particular algorithm on the operational image data collected with actual masks.”