The tech behind NASA's Martian chronicles


After Curiosity landed, JPL had to retrieve images from 150 million miles away via a low-bandwidth space network and deliver them to a voracious public quickly and without crashing websites. How did NASA do it?

UPDATE: This article has been updated to correct a reference to Amazon's regions and Availability Zones.

Mars. The red planet. Similar to Earth in many ways, it may be the only other planet within the solar system capable of sustaining life. It sat 154 million miles away from Earth on the day Curiosity landed. Countless books, movies and TV shows have starred the planet, and little green Martians have become a wacky staple of American culture. Suffice it to say, humans have often dreamt of their celestial neighbor and longed to explore its surface. But it doesn’t give up its secrets easily.

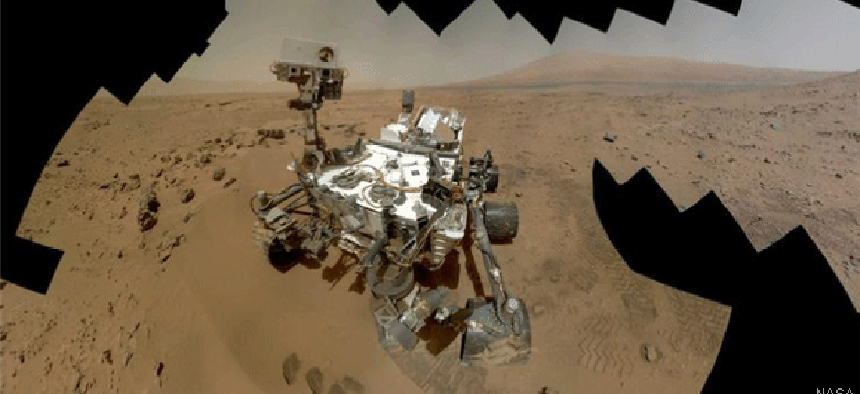

On Aug. 5, 2012, NASA dared to pierce its mysterious veil. The space agency had sent probes to Mars before, but never with an instrument as advanced as the minivan-sized Curiosity rover, and never using such a complicated delivery system. A unique sky crane transported the precious cargo down onto the red, rocky ground.

The world was watching, literally, and the manager of data services for tactical operations at NASA’s Jet Propulsion Laboratory, Khawaja Shams, and his team stood ready to broadcast one of the agency’s greatest successes — or perhaps most dismal failures — to millions of computers, tablets and smart phones around the globe.

To people quickly becoming accustomed to real-time streaming on the Web, seeing the images sent back from Curiosity might have seemed routine. But that belies the behind-the-scenes complexity of receiving signals over such a great distance via a low-bandwidth space network and making them instantly available to scientists and the public around the world — and dealing with heavy and fluctuating demand on bandwidth. Along with showing the world what Curiosity was finding, NASA was demonstrating the power of highly scalable, on-demand cloud computing.

For the operations team, the big events of Aug. 5 started with "seven minutes of terror" when Curiosity entered the Martian atmosphere. There was nothing NASA could do but hope that everything went according to the well-researched plan. Because it takes an average of 14 minutes to send a command or receive data back from Mars, if anything went wrong with the landing, NASA wouldn’t know until it was far too late.
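As a rough check on that delay, the one-way signal time follows from dividing the distance by the speed of light. The sketch below assumes the 154-million-mile landing-day distance cited in this article and a straight-line radio path; the function name is only illustrative.

```python
# Speed of light in vacuum, expressed in miles per second.
SPEED_OF_LIGHT_MILES_PER_SEC = 186_282.397


def one_way_delay_minutes(distance_miles: float) -> float:
    """Return the one-way light-travel time in minutes for a given distance."""
    seconds = distance_miles / SPEED_OF_LIGHT_MILES_PER_SEC
    return seconds / 60.0


# Landing-day distance quoted in the article (an approximate figure).
landing_day_delay = one_way_delay_minutes(154e6)
print(f"One-way delay: {landing_day_delay:.1f} minutes")
```

The result comes out just under 14 minutes, in line with the figure quoted above. A command followed by its confirmation would take roughly twice that.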

But Shams and his team had already been working all day. NASA was broadcasting videos about the landing on NASA TV, featuring educators, scientists and celebrities talking about Mars and the pending touchdown. These grew more popular as the actual landing time approached and as people around the world visited the Curiosity and Mars Exploration Web pages to see how things were going.

Shams admits he was a little nervous. His heart was pounding even though he felt confident in his equipment. NASA had enlisted a whole suite of programs and servers from Amazon Web Services to ensure that everyone around the world could tune in without any danger of crashing the network. Capacity could be added or removed on the fly and, as it turned out, the system was even able to absorb an emergency bandwidth overflow at a website that wasn’t part of the original plan.

But before all that happened, Shams checked his control panel and saw that all of the AWS programs NASA was using were running in the green. Amazon CloudWatch was a go. CloudFormation was green. Simple Workflow Service was ready to go when called upon. Simple Storage Service (S3) and Elastic Compute Cloud (EC2) were already being used. Even Route 53 was ready to provide Domain Name System management.

“The Amazon Cloud solution can scale to handle over a terabyte per second but only requires us to provision and pay for exactly how much capacity we need,” Shams said. In addition to being a pay-as-you-need system, it was highly redundant, able to suffer the unlikely loss of over a dozen data centers without ever disrupting the flow of video, data and information to its worldwide audience.

The closer Curiosity got to Mars and its much-publicized rendezvous with the planet, the more people tuned in to monitor its status. As it entered the Martian atmosphere, Shams noticed that bandwidth usage had grown to over 40 gigabits/sec, according to the Amazon CloudWatch program. CloudWatch monitors usage within the Amazon Cloud and can send out warnings and messages when certain thresholds are met. Shams said he was impressed with CloudWatch because it can send out e-mails and text messages, making sure that everyone is alerted to any signs of trouble. It instantly springs to work in troublesome situations too, such as when any of the servers that make up the cloud begin to have I/O errors.

However, CloudWatch never actually sent out any warnings during the Mars landing, because the CloudFormation stack was designed to seamlessly add more bandwidth and servers when needed. In fact, Shams was able to click to add a new 25 gigabit/sec stack to the JPL cloud, and register those new machines to the project on the fly with the Route 53 program.
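The sizing arithmetic behind adding stacks can be sketched in a few lines. This is an illustration only, not JPL's method and not an AWS API: the 25 gigabit/sec stack size comes from the article, while the function name and the 20 percent headroom are assumptions made for the example.

```python
import math

# Per-stack capacity mentioned in the article, in gigabits per second.
STACK_CAPACITY_GBPS = 25.0


def stacks_needed(demand_gbps: float, headroom: float = 0.2) -> int:
    """Return how many stacks cover the demand plus a safety margin.

    `headroom` is a hypothetical safety margin (0.2 means 20 percent
    extra capacity); the article does not say what margin JPL used.
    """
    required_gbps = demand_gbps * (1.0 + headroom)
    return math.ceil(required_gbps / STACK_CAPACITY_GBPS)


# Traffic levels reported at different points during the landing.
for demand in (40, 70, 100):
    print(f"{demand} Gbps -> {stacks_needed(demand)} stacks")
```

Under these assumptions, the jump from 40 to 100 gigabits/sec more than doubles the number of stacks required, which is why being able to add one with a click mattered.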

Back on Mars, Curiosity was hanging just a few meters above the surface of the planet, suspended in air by its rocket-powered sky crane. It would soon be lowered to the soil below, whereupon the crane would fly off and land a safe distance away, a move designed to prevent dust from kicking up around, and possibly damaging, the rover. Bandwidth usage worldwide was topping 70 gigabits/sec when an unexpected crisis struck.

“The main JPL website, still running under traditional infrastructure, was crumbling under the load of millions of excited users,” Shams said. “We built the Mars site for the mission, but people were coming in through the main JPL site.”

Acting quickly, Shams directed the IT staff to change the DNS entries for the JPL main site to dump all of its traffic into the cloud. This process had to be done manually because it wasn’t part of the system, so Route 53 couldn’t be used. Even so, staff members at JPL were able to make the changes within five minutes. This could have caused a perfect storm of bandwidth problems for the cloud, with millions of users getting directed into the Amazon system all at once, joining millions of others who were already there and those who were tuning in late to see if the ship made it to Mars.

Bandwidth exceeded 100 gigabits/sec, but nobody was cut off. The cloud expanded to accommodate everyone.

Jamie Kinney, AWS solutions architect, said one reason it all went so smoothly for the millions of end users is that the Amazon CloudFront content distribution network ensures that every person around the world connects to the part of the cloud that is geographically closest to them. Amazon has nine regions around the globe that are set up into Availability Zones. Each zone is independent and isolated from problems in the other areas. One region is located on the East Coast of the United States and two are on the West Coast. There are also regions in Europe, Singapore, Tokyo, Brazil and Australia. “So someone in Japan is going to get their feed from the Japan-based Availability Zone,” Kinney said.

There is also a dedicated GovCloud, which is used only by the U.S. government and conforms to the objectives of U.S. International Traffic in Arms Regulations, which, with regard to data, requires storage in an environment that can be accessed only by designated U.S. users.

Once Curiosity landed, it began its mission of sending data back to Earth. NASA had an ambitious plan to share all data coming from Mars with government scientists and the public at large instantly and simultaneously. For that to happen, Shams had built a pipeline through space that he named Polyphony.

Curiosity transmitted its data, including its first self-portrait photos, in a series of highly compressed bursts of data. Data has to be highly compressed because the space network currently used is low-bandwidth only. Data goes from Curiosity to the Odyssey orbiting spacecraft and then is transmitted to one of three antennas on Earth, which are spaced 120 degrees apart in California, Spain and Australia so that one of the locations is always facing deep space. But even so, the deep space network isn’t always available to Curiosity, so when transmissions are sent in a burst, they have to be intercepted and processed quickly to make the most of that limitation.

Data arriving from Mars is automatically directed by Simple Workflow Service to the right place, in this case the Amazon EC2 servers. These servers activate and provide the muscle to transform the bits and bytes into actual photographs. Their job finished, they dump the data and shut back down. NASA has to pay only for the limited time the EC2 servers are actually processing the data, saving time and money.

Those images are then distributed to the various Availability Zones. Government users at NASA see the photos by accessing GovCloud. They use the data to look for interesting places to direct Curiosity to explore and to scan for potential hazards looming around the vehicle.

The public sees the images at the same time, accessing them through whatever zone is closest to their location. To everyone involved, the process was seamless, and images sent from Mars were available on tablets, smart phones, desktops and notebooks within seconds of arrival. Very few people know the complexity of the backend that made it all possible.

Shams stressed that NASA’s ability to be efficient both in terms of distributing data worldwide and in how it managed the costs of such an ambitious project did not happen by accident, or overnight. He credited JPL CTO Tom Soderstrom with laying the groundwork for getting the agency ready to use the cloud.

“To that end, he set up a cloud computing commodity board that is responsible for setting up evaluations, contracts and demos of the most promising cloud computing capabilities across multiple vendors,” Shams said. “This board is meant to streamline the process through which we adopt the most relevant cloud capabilities.”

And without the cloud, Shams said that none of what they did in terms of public outreach would be possible. He’s happy that NASA blazed a trail that other government agencies can now follow.

That night, NASA achieved two major milestones. The agency landed a robotic laboratory safely on a planet 154 million miles away, and it proved that efficient use of cloud computing could accomplish what might have seemed like a miracle just a few years ago.

On that count, Shams summed up the evening with a simple phrase that has come to symbolize many of the agency’s exploits into space: “Mission accomplished.”