Internet2 backbone serving national supercomputer network

The XSEDE research network operators say bandwidth and networking tools are key to moving large files between high-performance systems.

A national network of university supercomputers has migrated to Internet2's 8.8 terabit-per-second optical backbone, which offers advanced networking services as well as additional bandwidth for the network's high-performance computing projects.

The Extreme Science and Engineering Discovery Environment (XSEDE) includes 17 universities and research facilities hosting high-performance computing and throughput machines, and advanced visualization, storage and gateway systems supporting more than 8,000 scientists.

The size of the pipes is important in enabling their research, said XSEDE project director John Towns of the University of Illinois. “Simple bandwidth availability will make a difference,” Towns said. “We’re already seeing that.”

But the task of moving increasingly large files between supercomputers requires new networking tools as well as big pipes. That is one of the reasons XSEDE chose Internet2 as its backbone provider, Towns said. “They were interested in partnering with us to develop the network services that our users need.”

Internet2 is a member-owned organization with more than 200 participating universities, labs and companies, providing advanced networking services to educational and research institutions. Last summer it finished building out a 15,000-mile core network offering 8.8 terabits/sec in 88 bundled 100-gigabit channels.
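The headline capacity follows directly from the channel count. As a quick check of the arithmetic, a minimal sketch (the function name is illustrative and not part of any Internet2 tooling, and decimal units are assumed, so 1 terabit = 1,000 gigabits):

```python
# Illustrative arithmetic only; the two figures come from the article.
CHANNELS = 88        # bundled optical channels
CHANNEL_GBPS = 100   # gigabits per second per channel


def aggregate_tbps(channels: int, gbps_per_channel: float) -> float:
    """Return total capacity in terabits per second (1 Tb = 1,000 Gb)."""
    return channels * gbps_per_channel / 1000


if __name__ == "__main__":
    print(f"{aggregate_tbps(CHANNELS, CHANNEL_GBPS)} Tb/s")
```

This is the nominal capacity of the full backbone; what any single institution sees is bounded by its own connection.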

XSEDE is an umbrella organization funded by a five-year, $121 million National Science Foundation grant to provide an enhanced environment for high-performance computing, which is becoming more critical to “big science” projects. Such projects not only require the computing power to run complex simulations and analytics, but also involve large data sets as both input and output.

XSEDE is led by the University of Illinois' National Center for Supercomputing Applications.

With an upfront cost of $30 million or more for a supercomputer, plus $1 million to $2 million a year for power, a dedicated environment and staff, these systems are hosted at only a handful of institutions, Towns said. Each has its own strengths and characteristics, and researchers often need to use separate systems to prepare data for input, run simulations or modeling, and analyze the results. In the past, data often was moved from one facility to another by hand. High-speed testbed and research networks now provide a way to move the data more efficiently.

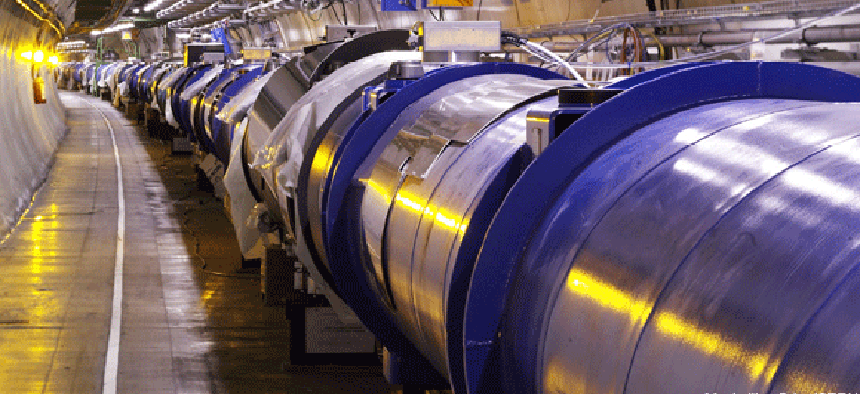

XSEDE previously had a backbone contract with National LambdaRail, a university-owned high-speed national research and education network whose users include U.S. laboratories participating in Large Hadron Collider projects. When that contract expired, XSEDE moved to Internet2 to take advantage of its research and development of services supporting bandwidth-intensive applications, such as the XSEDE-wide File System for handling large files.

Commercial carriers are deploying 100-gig networks, but “the way Internet2 deploys 100G is different,” said Rob Vietzke, Internet2’s vice president of network services. The bandwidth is provisioned to handle the high-performance needs of relatively few users rather than to aggregate a large number of small users. This means tens of gigabits per second of throughput are available for bursts of traffic.

The 17 XSEDE member institutions link to Internet2 through the nearest of the network’s 21 Advanced Layer 2 Service nodes. Most have 10G connections, although two schools, Indiana University and Purdue University, have 100G connections.
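To give a rough sense of what the difference between a 10G and a 100G connection means for moving data, here is a back-of-the-envelope sketch. The 1-terabyte dataset size is a hypothetical chosen for illustration and does not come from XSEDE, and the calculation assumes the full line rate is available, ignoring protocol overhead, storage speed and competing traffic:

```python
def ideal_transfer_seconds(size_terabytes: float, link_gbps: float) -> float:
    """Best-case time to move a dataset over a link at full line rate.

    Uses decimal units: 1 TB = 8e12 bits, 1 Gb/s = 1e9 bits per second.
    Real transfers will be slower than this lower bound.
    """
    bits = size_terabytes * 8e12
    return bits / (link_gbps * 1e9)


if __name__ == "__main__":
    dataset_tb = 1.0  # hypothetical dataset size, for illustration only
    for gbps in (10, 100):  # the two connection speeds cited in the article
        seconds = ideal_transfer_seconds(dataset_tb, gbps)
        print(f"{gbps}G link: about {seconds:.0f} seconds")
```

Under these idealized assumptions the hypothetical 1 TB dataset takes about 800 seconds at 10G and about 80 seconds at 100G.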

“It’s just a matter of time and economics,” Vietzke said of the larger connections for the two Hoosier schools. “Indiana was an early investor in 100G. They were in the forefront.”