New techniques behind Energy's plan for exascale computing


Scientists at the Energy Department's Los Alamos National Lab are working toward building an exascale computer that by 2020 could be powerful enough to model the human brain, cell by cell.

From the outside, it may look like several countries are in a neck-and-neck race to produce the fastest supercomputer, but the truth, at least for the United States, is that supercomputer growth is driven by application needs. And it’s an ongoing process.

Los Alamos supercomputer game plan

1. Expand the current design in scale.

2. Put more multicore CPUs in place.

3. Program compilers to best use the heterogeneous architecture for any program.

4. Fix the I/O bottleneck with heterogeneous flash and traditional disk-based storage.

5. Cool off the latest creation without using too much power.

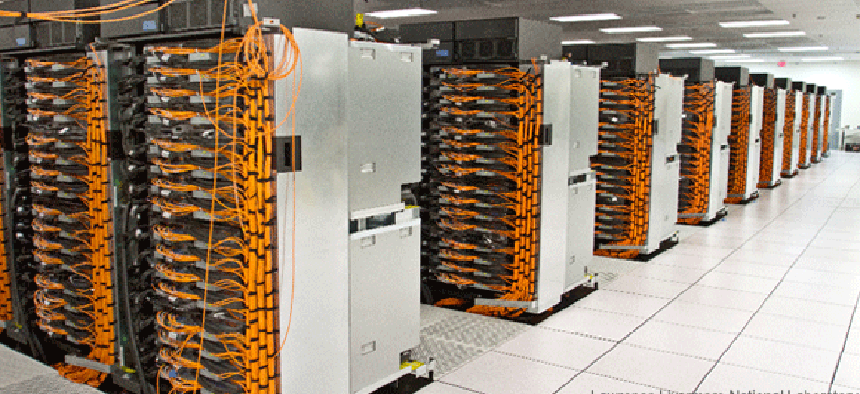

Titan, based at the Energy Department’s Oak Ridge National Laboratory, is capable of 20 petaflops, which is 20,000 trillion calculations per second. That is slightly faster than the Energy Department’s other big supercomputer, Sequoia, an IBM BlueGene/Q system based at Lawrence Livermore National Laboratory, which has a sustained speed of 16.32 petaflops.

But the goal, expected to be reached by 2018 or 2020, is an exaflop, which is 1,000 petaflops.
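The figures above are easy to check with a few unit conversions. The short Python sketch below uses only the ratings quoted here (Titan at 20 petaflops, Sequoia at 16.32 petaflops sustained). It compares a figure described as capability with one described as sustained speed, so the ratios are rough.

```python
# Unit conversions for the performance figures quoted in the article.
PETAFLOP = 10**15  # floating-point operations per second in one petaflop
EXAFLOP = 10**18   # floating-point operations per second in one exaflop
TRILLION = 10**12


def as_trillions_per_second(petaflops: float) -> float:
    """Express a petaflop rating as trillions of calculations per second."""
    return petaflops * PETAFLOP / TRILLION


def speedup_to_exaflop(petaflops: float) -> float:
    """How many times faster a machine rated at `petaflops` must become to reach one exaflop."""
    return EXAFLOP / (petaflops * PETAFLOP)


print(as_trillions_per_second(20.0))        # 20000.0 (Titan)
print(EXAFLOP // PETAFLOP)                   # 1000
print(speedup_to_exaflop(20.0))              # 50.0 (Titan)
print(round(speedup_to_exaflop(16.32), 1))   # 61.3 (Sequoia)
```

In other words, Titan would need to become roughly 50 times faster to reach the exaflop goal.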

“To use a real-life example, in 2005 a top supercomputer could handle a single cell model,” said Percy Tzelnic, senior vice president of the Fast Data Group for EMC. “In 2008, it could handle a cellular neocortical column (10,000 cells). In 2011, [it could model] a cellular mesocircuit (1 million cells). In 2014, a cellular rat brain model will be possible (100 million cells). And soon after, with exascale, say in 2020, the ability to model a full cellular human brain (100 billion cells) may be possible.”
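Tzelnic's milestones can be tabulated to show how quickly model size has grown. The sketch below simply divides consecutive cell counts. The 2020 entry is his projection, and cell count is a proxy for model size, not a direct measure of the computing power each step required.

```python
# Milestones quoted by Tzelnic: (year, number of cells a top system could model).
MILESTONES = [
    (2005, 1),                 # single cell model
    (2008, 10_000),            # neocortical column
    (2011, 1_000_000),         # mesocircuit
    (2014, 100_000_000),       # rat brain
    (2020, 100_000_000_000),   # full human brain (projected, exascale)
]


def growth_between_milestones(milestones):
    """Return (start_year, end_year, growth factor in modeled cells) for each consecutive pair."""
    return [
        (y0, y1, c1 // c0)
        for (y0, c0), (y1, c1) in zip(milestones, milestones[1:])
    ]


for start, end, factor in growth_between_milestones(MILESTONES):
    print(f"{start}-{end}: {factor:,}x more cells")
```

The last step, from a rat brain to a human brain, is a thousandfold jump in cell count.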

Methodical design strategy

It seems impossible, yet scientists working on the project are confident that it will happen due to the methodical way they approach supercomputing design.

Gary Grider, acting leader of the High Performance Computing Group at Los Alamos National Laboratory, says that similar leaps have been made in the past. “We saw this type of jump in the ’90s with the ASCI [Accelerated Strategic Computing Initiative] program to get us from gigaflops to teraflops,” he said. “So it’s not unheard of.”

Titan, which was built on the shell of the supercomputer Jaguar, works because of an ingenious design that combined CPUs and GPUs (graphics processing units) into one computing architecture. “In traditional CPU computing, you use up a lot of resources powering up a lot of things you don’t use,” Grider said. “By using GPUs instead, which are little SIMD [single-instruction, multiple-data] engines, you get what is essentially an array vector processor, and science is mostly about multiplying vectors.”
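To make Grider's SIMD point concrete, here is a toy counting model in Python, not a performance simulation: a scalar processor issues one instruction per element, while a SIMD engine issues one instruction per chunk of lanes. The function names and the 32-lane width are illustrative assumptions, not figures for any real GPU.

```python
import operator
from typing import Callable, List, Tuple

Op = Callable[[float, float], float]


def scalar_apply(op: Op, a: List[float], b: List[float]) -> Tuple[List[float], int]:
    """Scalar model: one instruction per element pair. Returns (results, instructions issued)."""
    results = []
    instructions = 0
    for x, y in zip(a, b):
        results.append(op(x, y))
        instructions += 1
    return results, instructions


def simd_apply(op: Op, a: List[float], b: List[float], width: int) -> Tuple[List[float], int]:
    """SIMD model: each instruction applies `op` to up to `width` lanes at once.

    `width` is a hypothetical lane count, not the figure for any particular GPU.
    """
    results = []
    instructions = 0
    for start in range(0, len(a), width):
        # One "instruction" covers every lane in this chunk.
        chunk = zip(a[start:start + width], b[start:start + width])
        results.extend(op(x, y) for x, y in chunk)
        instructions += 1
    return results, instructions


a = [float(i) for i in range(100)]
b = [2.0] * 100
scalar_results, scalar_count = scalar_apply(operator.mul, a, b)
simd_results, simd_count = simd_apply(operator.mul, a, b, width=32)
assert scalar_results == simd_results  # same answer, far fewer instructions issued
print(scalar_count, simd_count)  # 100 4
```

The element-wise vector multiply gives identical results either way; the SIMD model simply issues 4 instructions instead of 100.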

Grider said CPUs carry hard-wired functions, such as square-root units, that make them more efficient in desktop and workstation environments even though those functions are rarely used. Multiplied across thousands of processors, that adds up to a lot of wasted computing power in a typical supercomputer built only from CPUs.

GPUs, by comparison, are mostly blank slates that can take advantage of data parallelism, an approach first demonstrated with the hybrid IBM Roadrunner supercomputer at Los Alamos in 2008. In a combined system, the CPUs drive the GPUs’ tasks and can also take on work themselves when needed. That best-of-both-worlds combination is what makes it all work.

However, that’s not enough to get anywhere close to exaflop computing, Grider said. To some extent you can build on Titan, scaling it out and adding more nodes, but Grider estimated that path would yield at most a threefold performance increase. By adding more multicore processors, he thinks, performance could be pushed a bit further, perhaps tenfold.
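Applying Grider's estimates to Titan's 20-petaflop rating shows why incremental scaling falls short. This sketch assumes each estimate is an independent multiplier on Titan's current performance, which is one reading of his comments rather than a stated projection.

```python
TITAN_PF = 20.0      # Titan's quoted rating, in petaflops
EXAFLOP_PF = 1000.0  # one exaflop expressed in petaflops

# Grider's rough estimates, treated here as independent alternatives (an assumption).
ESTIMATES = {
    "scale out Titan's design": 3.0,
    "add more multicore processors": 10.0,
}


def remaining_gap(base_pf: float, factor: float, target_pf: float = EXAFLOP_PF):
    """Return (projected petaflops, further speedup still needed to hit the target)."""
    projected = base_pf * factor
    return projected, target_pf / projected


for path, factor in ESTIMATES.items():
    projected, gap = remaining_gap(TITAN_PF, factor)
    print(f"{path}: ~{projected:.0f} PF, still ~{gap:.1f}x short of an exaflop")
```

Under that reading, the two paths land around 60 and 200 petaflops, still roughly 17 and 5 times short of the goal.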

Deconstructing the supercomputer

But clearing the next great hurdle in supercomputing power will require new thinking and new architecture, and Energy is funding five projects that look at all aspects of supercomputing, from processing to storage and from software to cooling.

EMC's Tzelnic is working on those new technologies that will drive future supercomputers. He said one secret to faster computing may not be the hardware, but the software that drives it.

“The heterogeneous architecture adds major complexity; the programmer has to identify regions of the code that are amenable to GPU acceleration, which is great for SIMD computing but not so great for general purpose computing. And the programmer could run all of the code on the GPU, but that might actually cause slowdowns,” Tzelnic said.

“For one thing, there is a bandwidth limit between the general purpose CPUs, where the main code threads run, and the GPU accelerators connected to each CPU. And sending a block of code to be accelerated is not free,” Tzelnic explained. “If the cost of sending it is greater than the benefit of acceleration, then the programmer would have been better off executing that block of code on the general purpose CPU,” he said. “The compilers haven't really caught up yet, so the programmer must determine which blocks of code are most amenable to GPU acceleration.”
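Tzelnic's rule of thumb can be written as a simple inequality: offload a block only if transfer time plus launch overhead plus GPU compute time is less than the CPU time. The Python sketch below encodes that rule. The `CodeBlock` type, the link bandwidth and the launch overhead are all hypothetical, and the model ignores refinements such as overlapping transfers with computation.

```python
from dataclasses import dataclass


@dataclass
class CodeBlock:
    """Hypothetical timing estimates for one region of a program."""
    name: str
    cpu_seconds: float     # time to run the block on the host CPU
    gpu_seconds: float     # time to run the block on the GPU once its data is there
    bytes_to_move: float   # data that must cross the CPU-GPU link, both directions combined


def offload_cost(block: CodeBlock, link_bytes_per_s: float, launch_seconds: float) -> float:
    """Cost of accelerating a block: transfer time + fixed launch overhead + GPU compute."""
    return block.bytes_to_move / link_bytes_per_s + launch_seconds + block.gpu_seconds


def should_offload(block: CodeBlock, link_bytes_per_s: float, launch_seconds: float) -> bool:
    """Offload only when sending the block costs less than running it on the CPU."""
    return offload_cost(block, link_bytes_per_s, launch_seconds) < block.cpu_seconds


# Illustrative numbers only; none of these come from Titan.
LINK = 8e9       # bytes per second between CPU and GPU (assumed)
LAUNCH = 1e-5    # seconds of fixed overhead per offload (assumed)

vector_math = CodeBlock("vector multiply", cpu_seconds=0.5, gpu_seconds=0.05, bytes_to_move=8e8)
bookkeeping = CodeBlock("branchy bookkeeping", cpu_seconds=0.01, gpu_seconds=0.008, bytes_to_move=4e8)

for block in (vector_math, bookkeeping):
    print(block.name, should_offload(block, LINK, LAUNCH))
```

With these assumed figures the vector multiply is worth sending to the GPU, while the small bookkeeping block spends more time crossing the link than it would ever save, exactly the case Tzelnic describes.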

In fact, much of EMC’s work concentrates on the I/O software stack, essentially the storage system, which is a major bottleneck in Titan and other heterogeneous systems and will need to be resolved in the future.

“More and more disks became necessary, not because the amount of relevant data was increasing that fast, but because unlike processors, disks are not getting faster and faster. In fact, the disk bandwidth per bit tends to decrease,” Tzelnic said.

“Thus, labs have started to buy more disks for scaling bandwidth, but with wasted capacity,” Tzelnic explained. For exascale, “they couldn't just scale up the same homogeneous software architecture anymore; they need a heterogeneous storage architecture of flash acceleration plus general purpose disks,” he said. “Putting the hardware together is relatively simple, but the software and the programming model, to ensure we extract all the performance, is massively challenging.”
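The storage problem Tzelnic describes comes down to buying disks for bandwidth rather than capacity. The sketch below compares a disk-only tier with a flash-plus-disk split. Every device figure in it is a hypothetical round number, and it deliberately leaves out the hard part he mentions, the software that moves data between tiers.

```python
import math


def disks_for_homogeneous_tier(capacity_tb, bandwidth_mbs, disk_tb, disk_mbs):
    """Disk count when disks alone must meet both the capacity and the bandwidth target."""
    for_capacity = math.ceil(capacity_tb / disk_tb)
    for_bandwidth = math.ceil(bandwidth_mbs / disk_mbs)
    return max(for_capacity, for_bandwidth)


def unused_capacity_fraction(capacity_tb, disk_count, disk_tb):
    """Share of installed disk capacity that the workload does not need."""
    return 1 - capacity_tb / (disk_count * disk_tb)


def hybrid_tiers(capacity_tb, bandwidth_mbs, disk_tb, flash_mbs):
    """Heterogeneous split: flash devices sized for bandwidth, disks sized for capacity.

    Ignores flash capacity and the software that moves data between tiers.
    """
    flash_devices = math.ceil(bandwidth_mbs / flash_mbs)
    disks = math.ceil(capacity_tb / disk_tb)
    return flash_devices, disks


# Hypothetical targets and device figures, chosen for round numbers.
CAPACITY_TB = 10_000       # 10 PB of data to keep
BANDWIDTH_MBS = 1_000_000  # 1 TB/s of aggregate bandwidth
DISK_TB, DISK_MBS = 4, 100
FLASH_MBS = 2_000

disks = disks_for_homogeneous_tier(CAPACITY_TB, BANDWIDTH_MBS, DISK_TB, DISK_MBS)
print(disks, unused_capacity_fraction(CAPACITY_TB, disks, DISK_TB))  # 10000 0.75
print(hybrid_tiers(CAPACITY_TB, BANDWIDTH_MBS, DISK_TB, FLASH_MBS))  # (500, 2500)
```

With these assumed numbers, a disk-only design needs 10,000 disks to reach the bandwidth target, leaving three quarters of the installed capacity unused, while the hybrid split meets the same targets with 2,500 disks and 500 flash devices.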

So that is the current plan. Expand the current design in scale. Put more multicore CPUs in place. Program compilers that can best use the heterogeneous architecture for any program. Fix the I/O bottleneck with heterogeneous flash and traditional disk-based storage. And cool off the latest creation without using too much power.

The next great supercomputer scheduled to be built at Los Alamos should take advantage of all the techniques being created today. Grider expects the machine, named Trinity, to be about five times bigger than Titan and to perform in the 50 to 100 petaflop range. The next computer after that should be even faster, until the lab reaches exascale in 2020.

Even if they don’t make it by 2020, Grider isn’t worried. He’s more concerned about the number of questions, especially in the area of weapons research, that can’t be answered today with Titan. As fast as Titan is, Grider said, there are certain things it can’t do, either because the problem is too large to fit into memory or because running the program would take years of continuous computing before Titan returned an answer.
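The "years of continuous computing" problem is simple division: total operations divided by sustained speed. The sketch below uses a hypothetical workload of 10^25 operations and optimistically assumes each machine sustains its full rating for the whole run.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def years_to_solution(total_operations: float, sustained_flops: float) -> float:
    """Wall-clock years to finish a run, assuming `sustained_flops` is held for the entire run."""
    return total_operations / sustained_flops / SECONDS_PER_YEAR


WORKLOAD_OPS = 1e25  # hypothetical problem size in floating-point operations

titan_years = years_to_solution(WORKLOAD_OPS, 20e15)  # Titan's 20-petaflop rating
exa_years = years_to_solution(WORKLOAD_OPS, 1e18)     # a one-exaflop machine
print(round(titan_years, 1))       # 15.9
print(round(exa_years * 365))      # 116 (days)
```

Under those assumptions the run takes roughly 16 years on Titan versus under four months on an exaflop machine. A problem that does not fit in memory is a separate, harder limit: no amount of waiting helps.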

Tzelnic also thinks that the 2020 goal of exascale computing is possible, though, like Grider, he is more concerned with solving problems than with reaching a benchmark.