IBM bows out of facial recognition market

The company announced that it will no longer sell general-purpose facial recognition or analysis software.

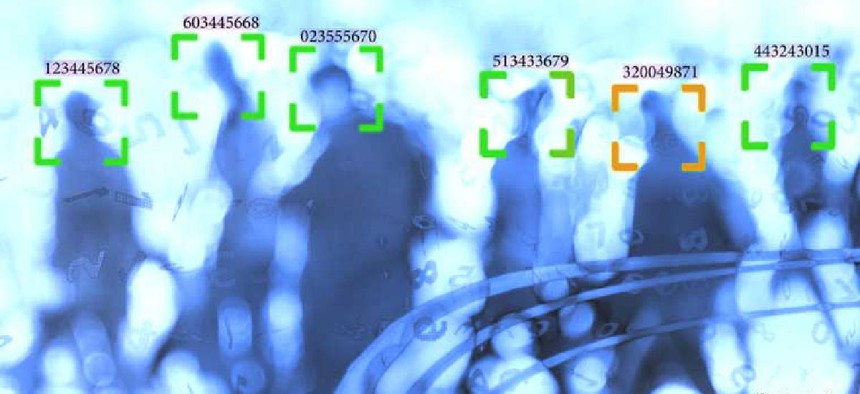

While acknowledging that artificial intelligence can help law enforcement agencies keep people safe, IBM has announced it will no longer sell AI-powered general-purpose facial recognition or analysis software. Two days later, Amazon said it would pause police use of its facial recognition software for a year.

In a June 8 letter to five lawmakers who have recently sponsored police reform legislation, IBM CEO Arvind Krishna called for the responsible use of technology and said the company opposes the use of any technology, including facial recognition, for “mass surveillance, racial profiling, violations of basic human rights and freedoms, or any purpose which is not consistent with our values and Principles of Trust and Transparency.”

He said the company would like to work with Congress in three key policy areas: police reform, responsible use of technology, and broadening skills and educational opportunities.

Krishna advocated a national dialogue on the use of facial recognition technology by domestic law enforcement agencies, noting that “vendors and users of AI systems have a shared responsibility to ensure that AI is tested for bias, particularly when used in law enforcement, and that such bias testing is audited and reported.” He also urged a national policy that encourages and advances technology that improves police transparency and accountability, such as body cameras and data analytics.

Many vendors besides IBM offer facial recognition software, including Amazon, Microsoft and Google, which hold the lion’s share of the market.

On June 10, Amazon announced a one-year moratorium on police use of its Rekognition facial recognition technology, saying it hoped the time would allow Congress “to implement appropriate rules.” The company said it would continue to allow organizations like the International Center for Missing and Exploited Children to use the facial recognition software to help rescue human trafficking victims.

Some reports have noted that IBM was not heavily invested in computer vision and facial recognition technology and was unable to match the repositories of visual data held by its competitors.

The technology is also not uniformly mature. The National Institute of Standards and Technology’s December 2019 report found that the accuracy with which software identifies people of different sexes, ages and racial backgrounds depends on the system’s algorithm, the application that uses it and the data it is fed. When it evaluated 189 software algorithms from 99 developers, NIST found higher rates of false positives for Asian and African American faces relative to images of Caucasians, and higher rates of false positives for African American females.

“Until the technology is 100%, I’m not interested in it,” Boston Police Commissioner William G. Gross told the Boston Herald. Boston is the latest city to ban the use of the technology, joining San Francisco, Oakland and Berkeley, Calif., and Somerville, Cambridge, Northampton, Springfield and Brookline, Mass.