Super models

Energy puts its power to use on high-resolution modeling programs.

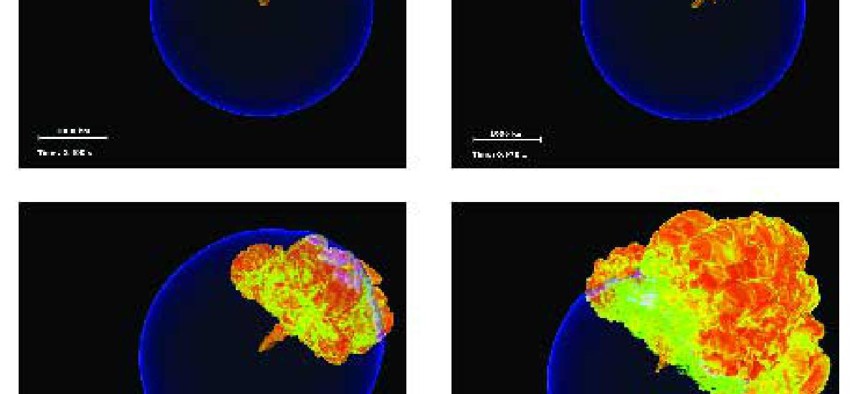

STAR OF THE SHOW: The Energy Department is lending 2.5 million hours of supercomputer time to University of Chicago researchers, who will use the time to model how a supernova explodes. This series of time-lapse shots shows how a white dwarf star detonates into a supernova, a process that will be replicated on computers at Lawrence Berkeley National Laboratory's National Energy Research Scientific Computing Center. Modeling such stellar explosions could help scientists learn more about dark energy, a theoretical but potentially powerful force in the universe.

Supercomputing muscle is nothing new for the department. The latest Top500 list of the world's fastest supercomputers, compiled last November, includes five DOE lab supercomputers in the top 10. And one being developed at Los Alamos could be even faster, with more than 32,000 processors.

What kinds of jobs require thousands of processors? Modeling complex physical phenomena, for one. Replicating complex events such as global weather in 2-D or 3-D models can provide insights not otherwise attainable in the laboratory, said Raymond Orbach, Energy's undersecretary for science.

High-end computation 'gives us the ability to simulate things which cannot be done experimentally, or for which no theory exists,' Orbach said. And such simulations, Energy officials claim, will keep the country at the forefront of science as well as commercially competitive with the rest of the world. The race is to model events at as high a resolution as possible and to make the models as holistic as possible.

The Sharper image

Last month, Energy's Office of Science, in conjunction with a private-industry consortium called the Council on Competitiveness, awarded computer time on its biggest iron to 45 projects submitted by academia and industry. Through the Innovative and Novel Computational Impact on Theory and Experiment program, or INCITE, the winners will share a total of 95 million hours of CPU time on some of the largest Energy machines. The winning proposals will address some of the most difficult problems faced by science and engineering, including deep-science work in accelerator physics, astrophysics, chemical sciences, climate research, engineering physics and environmental science.

These are big jobs. The average one will take a little over 2 million hours of CPU time, equivalent to about 42 days on a 2,000-processor machine or about 228 years on a single desktop computer.
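The CPU-hour comparisons above can be checked with a few lines of arithmetic. This sketch assumes that a CPU hour means one processor busy for one hour, that the work divides perfectly across processors, and that a desktop counts as a single processor running around the clock. The helper name `wall_clock_hours` is purely illustrative.

```python
# Back-of-the-envelope check of the CPU-hour figures quoted above.
# Assumptions: one "CPU hour" = one processor busy for one hour; work splits
# perfectly across processors; a desktop is one processor running nonstop.

HOURS_PER_DAY = 24
HOURS_PER_YEAR = 365.25 * HOURS_PER_DAY  # 8,766 hours


def wall_clock_hours(cpu_hours: float, processors: int) -> float:
    """Wall-clock hours needed if the work splits perfectly across processors."""
    return cpu_hours / processors


total_hours = 95_000_000
projects = 45
average = total_hours / projects  # about 2.11 million CPU hours per project

job = 2_000_000  # the article's rounded "2 million hours"
days_on_cluster = wall_clock_hours(job, 2_000) / HOURS_PER_DAY
years_on_desktop = wall_clock_hours(job, 1) / HOURS_PER_YEAR

print(f"average allocation: {average:,.0f} CPU hours")
print(f"2,000-processor machine: {days_on_cluster:.1f} days")
print(f"single desktop: {years_on_desktop:.0f} years")
```

Run as written, it reports an average of about 2.1 million hours per project, roughly 42 days on 2,000 processors and about 228 years on one desktop, in line with the article's figures. Real jobs rarely scale perfectly, so actual wall-clock times would be longer.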

On the commercial side, Boeing Co. will get 200,000 processor hours on Oak Ridge's Cray X1E to follow up on work started in previous years. The company will use the time to study how air flows around the wings of aircraft it is developing, work that has typically required expensive wind tunnels and wing prototypes.

This kind of simulation can save the company untold money and time, said Michael Garrett, Boeing's director of airplane performance, during a press conference announcing the INCITE winners. Computational fluid dynamics 'allows us to do simulations upfront in the design process, in a level and fidelity we've never seen before.'

In 1980, the company built and tested 77 prototype wings for its Boeing 767 aircraft; its new 787 aircraft required only 11, thanks to earlier work on DOE machines.

On the academic side, the University of Chicago will get the chance to model both the very, very small and the very, very large.

University of Chicago researcher Benoit Roux said his team plans to devote its allotted 4 million computational hours on an Oak Ridge Cray XT3 to modeling how the protein-based gates in cell membranes work. Such gates are highly selective: they let only certain substances into the cell.

'No one really knows how [they] move,' Roux said. 'Experiments will never help us visualize the working of the machine.' The gates, which are in a sense micro-machines, are of great interest to pharmaceutical companies, which could use the knowledge to develop new drugs.

Researchers also will use processor hours to study how a supernova detonates. In essence, a supernova is the explosion of a star that has shrunk to the size of a small planet, explained University of Chicago researcher Robert Fisher. Two-dimensional models of these sorts of stellar explosions have been done before. Observations of such explosions led to the discovery of dark energy, a still-unproven form of energy whose 'gravitational forces are repulsive rather than attractive,' Fisher said.

What this year's winners have in common is the need for greater fidelity. Most of the proposals stressed that the more detail a model can capture, the more insight researchers can get from it. 'It's all about the granularity,' said Allen Badeau, chief science officer for IMTS, a contractor that supports government supercomputer systems and counts Energy among its customers.
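Why does granularity cost so much? A rough cost model helps. The sketch below assumes a uniform 3-D grid and explicit time stepping, where numerical stability (the Courant-Friedrichs-Lewy, or CFL, condition) forces the time step to shrink along with the cell size. Neither assumption comes from the projects described here, and `refinement_cost` is a hypothetical helper.

```python
# Rough cost model for refining a simulation grid.
# Assumptions (illustrative, not taken from any INCITE project):
#   * a uniform grid in `dims` spatial dimensions
#   * explicit time stepping, where stability (the CFL condition) forces the
#     time step to shrink in proportion to the cell size
#   * memory scales with the number of cells; work scales with cells x steps


def refinement_cost(refine: int, dims: int = 3) -> tuple[int, int]:
    """Return (memory factor, compute factor) for refining each axis by `refine`."""
    cells = refine ** dims  # more cells along every axis
    steps = refine          # proportionally smaller time step, so more steps
    return cells, cells * steps


for r in (2, 4, 10):
    mem, work = refinement_cost(r)
    print(f"{r:>2}x finer: {mem:>6,}x memory, {work:>7,}x compute")
```

Under these assumptions, halving the cell size costs 8 times the memory and 16 times the computation, and a 10-fold refinement costs 10,000 times the computation.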

As if achieving higher resolution weren't enough of a job, researchers are now beginning to merge multiple models. And combining two disparate models can multiply processing and memory requirements.

Oak Ridge is using this approach to advance plasma fusion theory, with funding from the agency's Scientific Discovery through Advanced Computing program, an effort to bring supercomputing power to researchers. The work ultimately could provide a cheap and safe form of nuclear energy.

The Heat is on

The objective is to find a way to get plasma nuclei to fuse, releasing energy in the process, said Don Batchelor, head of ORNL's plasma theory group. Researchers could try to smash plasma particles together one by one, but this requires expensive equipment and offers a very low probability of success.

Instead, the group is looking at ways to model the process of heating a mass of plasma particles to 100 million degrees Celsius, which makes them more likely to overcome their mutual electrical repulsion and fuse.
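To see why heating helps, compare the average kinetic energy of particles at room temperature with that at 100 million degrees Celsius. This sketch uses the ideal-gas relation E = (3/2) k_B T, which is a simplification for a real plasma. The function name is illustrative.

```python
# Average kinetic energy of particles in a hot plasma, using the ideal-gas
# relation E_avg = (3/2) k_B T. Illustrative only: real fusion plasmas have a
# spread of energies, and the faster-than-average particles fuse most readily.

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV per kelvin


def mean_kinetic_energy_ev(temp_celsius: float) -> float:
    """Mean translational kinetic energy per particle, in electronvolts."""
    temp_kelvin = temp_celsius + 273.15
    return 1.5 * BOLTZMANN_EV_PER_K * temp_kelvin


room = mean_kinetic_energy_ev(20)
plasma = mean_kinetic_energy_ev(100e6)

print(f"room temperature: {room:.3f} eV")
print(f"100 million C:    {plasma / 1000:.1f} keV")
print(f"ratio:            {plasma / room:,.0f}x")
```

The plasma particles carry on average about 13 keV, several hundred thousand times the energy of room-temperature particles. Because energies follow a distribution, particles in the fast tail are the ones most likely to fuse.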

Such a mass of plasma must be contained somehow, and the general approach has been to trap it in a doughnut-shaped magnetic field. This is where ORNL must make two models: one of the magnetic field and one of the plasma itself.

Fusing the models is not an easy task, Batchelor said. The two models operate on entirely different time scales. To model the magnetic field, the computer would have to calculate changes in picoseconds. But the fusion reactions play out over time scales best measured in seconds. So every single fusion model relies on 12 or more complete models of the magnetic field.

'So we have maybe 15 orders of magnitude difference in time to deal with,' Batchelor said. 'But in order to understand the whole thing, you must couple it all together.'
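One common way to couple models that run on very different time scales is subcycling: for every step of the slow model, the fast model takes many small steps, and the slow model sees only the fast model's averaged result. The toy below is a minimal sketch of that control flow, not ORNL's method. The two equations, the time constants and all function names are hypothetical stand-ins.

```python
# Toy multirate ("subcycled") coupling of a fast model and a slow model.
# Hypothetical stand-ins: `fast` relaxes toward the slow state within
# TAU_FAST, while `slow` decays in response to the time-averaged fast state
# over the much longer TAU_SLOW. Real plasma codes are far more complex;
# this only shows the control flow of coupling two time scales.

TAU_FAST = 1e-3   # fast relaxation time (arbitrary units)
TAU_SLOW = 1.0    # slow evolution time
DT_FAST = 1e-4    # step small enough to resolve the fast physics
SUBSTEPS = 1_000  # fast steps per slow step
DT_SLOW = DT_FAST * SUBSTEPS


def advance_fast(fast: float, slow: float, n: int) -> tuple[float, float]:
    """Run n explicit fast steps with `slow` frozen; return final and mean fast state."""
    total = 0.0
    for _ in range(n):
        fast += DT_FAST * (slow - fast) / TAU_FAST
        total += fast
    return fast, total / n


def advance_slow(slow: float, fast_mean: float) -> float:
    """One explicit slow step driven by the averaged fast state."""
    return slow + DT_SLOW * (-0.5 * fast_mean) / TAU_SLOW


def run(slow_steps: int) -> tuple[float, float, int]:
    """Alternate the two models; return final slow state, fast state, fast-step count."""
    slow, fast, fast_calls = 1.0, 0.0, 0
    for _ in range(slow_steps):
        fast, fast_mean = advance_fast(fast, slow, SUBSTEPS)
        fast_calls += SUBSTEPS
        slow = advance_slow(slow, fast_mean)
    return slow, fast, fast_calls


if __name__ == "__main__":
    slow, fast, calls = run(slow_steps=10)
    print(f"slow={slow:.3f} fast={fast:.3f} fast steps={calls:,}")
```

Even in this toy, ten slow steps require 10,000 fast steps. Resolving picosecond changes across one full second would take on the order of 10^12 fast steps, which illustrates how quickly such coupling drives up computing requirements.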

That coupling has led to some enormous computing requirements. Batchelor has seen programs take as much as 600GB of working memory.

Nonetheless, federal agencies in general have been tackling this sort of 'coupled modeling' with increasing regularity over the past 10 years, noted Per Nyberg, Cray's director for its earth sciences segment.

Another example is modeling the Earth's temperature. A global model of the atmosphere can be linked with a global model of the ocean's temperature so that the two affect each other's behavior in a feedback loop. In this approach, Nyberg said, 'We end up with a more accurate representation of the state of the Earth.'
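The atmosphere-ocean feedback loop can be illustrated with two toy models that hand heat back and forth through a shared coupler at every step. This is a minimal sketch with invented numbers, not a real climate coupler: `Box`, `couple`, `EXCHANGE` and the heat capacities are all hypothetical.

```python
# Toy two-way coupling between an "atmosphere" box and an "ocean" box.
# Hypothetical numbers throughout: each model keeps its own temperature and
# heat capacity, and a coupler swaps the heat flux between them every step,
# so each model's output becomes the other's input (the feedback loop).

from dataclasses import dataclass


@dataclass
class Box:
    temp: float           # temperature in arbitrary units
    heat_capacity: float  # heat needed to change temp by one unit


EXCHANGE = 0.5  # hypothetical heat-transfer coefficient
DT = 0.1        # coupling time step


def couple(atmos: Box, ocean: Box, steps: int) -> None:
    """Exchange heat between the two boxes for `steps` coupling steps."""
    for _ in range(steps):
        # The coupler computes one shared flux from both models' current state...
        flux = EXCHANGE * (atmos.temp - ocean.temp)
        # ...and applies it with opposite signs, so total heat is conserved.
        atmos.temp -= DT * flux / atmos.heat_capacity
        ocean.temp += DT * flux / ocean.heat_capacity


if __name__ == "__main__":
    atmos, ocean = Box(temp=30.0, heat_capacity=1.0), Box(temp=10.0, heat_capacity=50.0)
    couple(atmos, ocean, steps=500)
    print(f"atmosphere={atmos.temp:.2f} ocean={ocean.temp:.2f}")
```

Starting the atmosphere at 30 with a heat capacity of 1 and the ocean at 10 with a heat capacity of 50, both settle near 10.4. The ocean's much larger heat capacity means it barely moves while the atmosphere is pulled toward it, and the total heat stays constant throughout.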

'There are models of these individual things, but you can't predict what will happen until you put them all together,' Batchelor said. 'And that is a very difficult thing.'
