NASA to test Google 3D mapping smartphones

Project Tango, Google’s prototype 3D mapping smartphone, will be used by NASA to help the International Space Station (ISS) with satellite servicing, vehicle assembly and formation-flying spacecraft configurations.

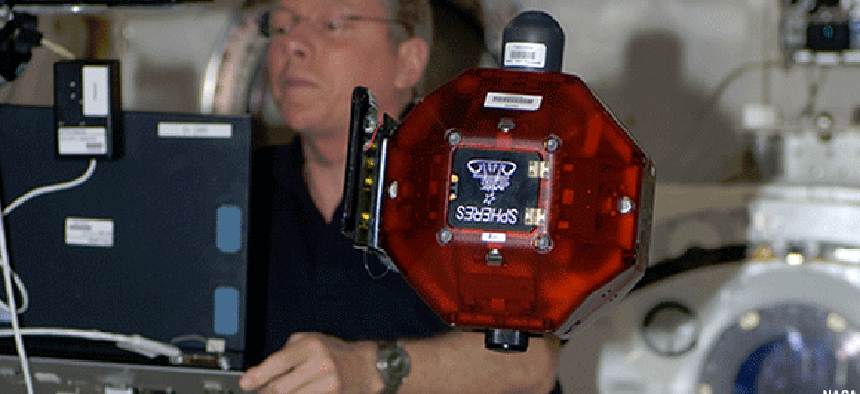

NASA’s SPHERES (Synchronized Position Hold, Engage, Reorient, Experimental Satellites) are free-flying bowling-ball-sized spherical satellites that will be used inside the ISS to test autonomous maneuvering. By connecting a smartphone to the SPHERES, the satellites get access to the phone’s built-in cameras to take pictures and video, sensors to help conduct inspections, computing units to make calculations and Wi-Fi connections to transfer data in real time to the computers aboard the space station and at mission control, according to NASA.

The prototype Tango phone includes an integrated custom 3D sensor, which lets the device track its own position and orientation in real time and generate a full 3D model of its environment. “This allows the satellites to do a better job of flying around on the space station and understanding where exactly they are,” said Terry Fong, director of the Intelligent Robotics Group at NASA’s Ames Research Center.

Google handed out 200 smartphone prototypes earlier this month to developers for testing the phone’s 3D mapping capabilities and developing apps to improve these capabilities.

The customized prototype Android phones create 3D maps by tracking a user’s movement through a space. Sensors “allow the phone to make over a quarter million 3D measurements every second, updating its position and orientation in real-time, combining that data into a single 3D model of the space around you,” Google said.

Mapping is done with four cameras in the phone, according to a post on Chromium. The phone has a standard 4MP rear-facing color camera, a fisheye camera with a 180-degree field of view (FOV), a 3D depth camera capturing 320×180 frames at 5Hz and a front-facing camera with a 120-degree FOV, which should match the field of view of the human eye.
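The depth camera figures line up with Google’s “quarter million” claim, as a quick back-of-the-envelope check suggests. The sketch below assumes that every pixel in a 320×180 depth frame counts as one 3D measurement, which the sources do not state explicitly; the constant and function names are illustrative only.

```python
# Rough estimate only: assumes each depth pixel yields one 3D measurement.
DEPTH_WIDTH = 320    # depth frame width in pixels, as reported
DEPTH_HEIGHT = 180   # depth frame height in pixels, as reported
FRAME_RATE_HZ = 5    # depth frames per second, as reported


def depth_points_per_second(width, height, rate_hz):
    """Return the number of depth samples produced per second."""
    return width * height * rate_hz


points_per_second = depth_points_per_second(DEPTH_WIDTH, DEPTH_HEIGHT, FRAME_RATE_HZ)
print(points_per_second)  # 288000
```

Under that assumption, the depth camera alone would produce 288,000 samples a second, slightly more than a quarter million.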

The cameras use Movidius’ Myriad 1 vision processor platform. Earlier visual sensor technology was prohibitively expensive and drained too much battery to be viable in phones; newer vision processors use considerably less power. Myriad 1 will allow the phone to handle motion detection and tracking, depth mapping, and the recording and interpretation of spatial and motion data for image-capture apps, games, navigation systems and mapping applications, according to TechCrunch.

The cameras, together with the processor, let the phone track and create a 3D map of its surroundings, opening up the possibility for easier indoor mapping, a problem facing urban military patrols and first responders.