A giant technical leap in speech recognition

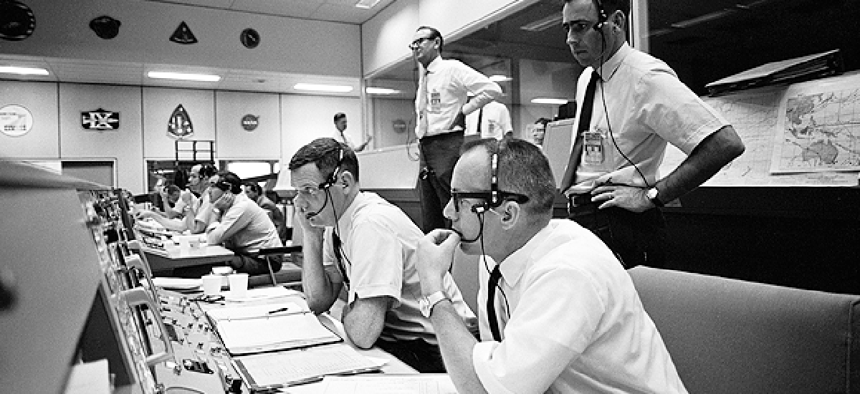

Researchers have digitized and transcribed thousands of hours of audio conversations between astronauts, mission-control specialists and back-room support staff during the Apollo moon missions.

Speech recognition software has gotten good enough that most large businesses now use it to route customer calls. Still, detecting whether a caller is saying “sales” or “customer service” is relatively simple compared to transcribing radio conversations of overlapping speakers who are 240,000 miles apart.

That was the challenge taken on by researchers at the University of Texas at Dallas when they received a National Science Foundation grant in 2012 to develop speech-processing technology to transcribe thousands of hours of audio conversations between astronauts, mission-control specialists and back-room support staff during the Apollo moon missions.

According to John Hansen, a professor of electrical and computer engineering and director of the Center for Robust Speech Systems at the university, the project involved much more than just developing speech-recognition software that could manage multiple speakers and variable sound quality. The first challenge was to digitize more than 200 reels of obsolete analog tapes.

The only way to play the reels was on a SoundScriber, a device more than 55 years old. Its main limitation was that it could read only one track at a time, and the tapes had 30 tracks. At that rate, Hansen said, digitizing the tapes for Apollo 11 alone would have taken more than 170 years. “One of the main engineering challenges we faced was designing a 30-track read head to read all 30 tracks at the same time,” he said.

Once the team, led by Hansen and research scientist Abhijeet Sangwan, had built and installed a 30-track read head that cut the digitizing process down to a matter of months, its next challenge was developing software to process the speech. Before transcribing anything, the team had to develop algorithms that could distinguish the different voices on the recordings and produce what Hansen called a “diarization”: a timeline of who is speaking when and with whom.

“Say we select a given hour,” Hansen said. “Over that hour how much time did each person talk? The flight director talks to Buzz Aldrin for, say, 18 minutes in that hour. Over that 18 minutes, the flight director initiates 80 percent of the conversations.”
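Once a diarization exists, the statistics Hansen describes reduce to simple bookkeeping over the timeline. The sketch below assumes a hypothetical `Segment(speaker, start, end)` record per speaking turn and an invented rule for where one exchange ends and the next begins (a silence longer than `max_gap` seconds); the article does not say how the UT Dallas system defines a conversation or who "initiates" it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Segment:
    """One speaking turn from a diarization (hypothetical format)."""
    speaker: str
    start: float  # seconds from the start of the selected hour
    end: float


def talk_time(segments):
    """Total seconds each speaker talks within the given segments."""
    totals = defaultdict(float)
    for seg in segments:
        totals[seg.speaker] += seg.end - seg.start
    return dict(totals)


def initiation_share(segments, a, b, max_gap=30.0):
    """Fraction of a/b exchanges that speaker `a` opened.

    Assumption for illustration: an exchange is a run of turns by a or b,
    and a new exchange starts whenever the silence since the previous a/b
    turn exceeds max_gap seconds. Whoever speaks first in a run opens it.
    """
    turns = sorted((s for s in segments if s.speaker in (a, b)),
                   key=lambda s: s.start)
    opened = {a: 0, b: 0}
    prev_end = None
    for seg in turns:
        if prev_end is None or seg.start - prev_end > max_gap:
            opened[seg.speaker] += 1
        prev_end = seg.end
    total = opened[a] + opened[b]
    return opened[a] / total if total else 0.0
```

With a real diarization, `talk_time` would give figures like "18 minutes in that hour," and `initiation_share` a figure like "80 percent"; the gap threshold is the main knob, and a different definition of an exchange would change the result.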

The system developed by the UT Dallas team can also perform sentiment analysis, although Hansen said the team deliberately limits it to tagging a stretch of speech as positive, neutral or negative. “We did not want to look at the full emotion of the speaker,” said Hansen. “It may or may not be appropriate if you're labeling someone as angry or happy.” But rough estimates of sentiment, Hansen said, can be useful in understanding how people interact in stressful situations.
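To show what a coarse three-way label looks like in practice, here is a minimal lexicon-based tagger. This is a swapped-in technique chosen for brevity, not the team's method, and the cue-word sets are hypothetical: it counts positive and negative cue words and reports only the sign of the difference, never a finer emotion.

```python
import re

# Hypothetical cue words for illustration only; not the UT Dallas lexicon.
POSITIVE = {"good", "great", "fine", "beautiful", "excellent"}
NEGATIVE = {"problem", "alarm", "bad", "fail", "abort"}


def tag_sentiment(utterance):
    """Return "positive", "neutral" or "negative" for one utterance."""
    tokens = re.findall(r"[a-z']+", utterance.lower())
    score = (sum(t in POSITIVE for t in tokens)
             - sum(t in NEGATIVE for t in tokens))
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"
```

Restricting the output to three labels keeps the tagger from making claims such as "angry" or "happy," which matches the limit Hansen describes.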

Finally, the team had to build a predictive lexicon for the speech-recognition software itself, a particularly challenging task given the language used at NASA.

During the moon missions, Hansen said, NASA had to execute all its command and control through voice communications. “That means they had to make the audio as compact and information bearing as possible,” he said. “For anything that would require multiple words, they would want to generate an acronym.”

To build that lexicon, the team harvested text from the web, books, news reports and other documents related to the Apollo program. “We pulled 4.5 billion words,” Hansen said. The team then analyzed how often each word occurred and how often it appeared alongside other words. Those statistics let a speech-recognition engine make educated guesses about what a speaker said when audio distortion or overlapping conversations created doubt.
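A classic way to turn word and word-pair counts into "educated guesses" is an n-gram language model. The sketch below is a bigram model with add-alpha smoothing trained on a tiny, made-up corpus; the article does not say which model the team used, so treat the technique and the example phrases as assumptions. When the audio is ambiguous, the recognizer can prefer the candidate transcription the model scores higher.

```python
import math
from collections import Counter


def train_bigrams(sentences):
    """Count single words and adjacent word pairs, with sentence markers."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def log_prob(sentence, unigrams, bigrams, alpha=1.0):
    """Log-probability of a sentence under an add-alpha smoothed bigram model."""
    vocab = len(unigrams)
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        # P(word | prev) = (count(prev, word) + alpha) / (count(prev) + alpha * V)
        p = (bigrams[(prev, word)] + alpha) / (unigrams[prev] + alpha * vocab)
        total += math.log(p)
    return total


# Toy corpus standing in for the harvested Apollo-related text.
corpus = ["go for powered descent", "you are go for landing", "roger go for landing"]
uni, bi = train_bigrams(corpus)
candidates = ["go for landing", "go four landing"]
best = max(candidates, key=lambda c: log_prob(c, uni, bi))
```

Smoothing matters here because jargon and acronyms that never appeared in the training text would otherwise get zero probability and could never be recognized.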

The team has processed all 19,000 hours of Apollo audio, which NASA will soon make available to the public.

Hansen was quick to acknowledge that the resulting transcriptions are far from flawless. The word error rate can be anywhere from 5 percent when conditions are good to 85 percent when they are bad. “A lot depends on when someone goes off target, if they’re using words or discussing topics that are in our NASA lexicon,” he said.
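Word error rate is conventionally defined as the number of word substitutions, deletions and insertions needed to turn the recognizer's output into a reference transcript, divided by the number of words in the reference. A minimal sketch using the standard edit-distance recurrence:

```python
def word_error_rate(reference, hypothesis):
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # d[i][j] = minimum edits to turn ref[:i] into hyp[:j]
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            sub = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,        # deletion
                          d[i][j - 1] + 1,        # insertion
                          d[i - 1][j - 1] + sub)  # match or substitution
    return d[len(ref)][len(hyp)] / len(ref)
```

For example, `word_error_rate("the eagle has landed", "the beagle landed")` is 0.5: one substitution and one deletion against four reference words. Because insertions also count, the rate can exceed 100 percent on very noisy audio.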

Those error rates, however, aren’t as significant as they might seem. Hansen said the key benefit of even an error-laden transcript is that it is searchable. If users reading a transcript are unsure about a passage, he said, they can click a link that takes them directly to that section of the underlying .WAV file.
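A time-aligned transcript makes that kind of search-and-jump straightforward. The sketch below assumes a hypothetical transcript format of `(start_seconds, word)` pairs and builds links with the W3C Media Fragments `#t=` syntax; the article does not describe how the real links are constructed, and the file name is invented. Only single words are indexed, for brevity.

```python
from collections import defaultdict


def build_index(words):
    """Map each lowercased word to the start times (seconds) where it occurs."""
    index = defaultdict(list)
    for start, token in words:
        index[token.lower()].append(start)
    return index


def search(index, term, wav_url):
    """Return one link per occurrence, pointing at that moment in the audio."""
    return [f"{wav_url}#t={start:.1f}" for start in index.get(term.lower(), [])]


# Hypothetical time-aligned transcript fragment.
transcript = [(12.0, "Houston"), (12.4, "Tranquility"), (13.1, "Base"), (95.5, "Tranquility")]
idx = build_index(transcript)
links = search(idx, "tranquility", "apollo11_reel.wav")
```

Even when a transcript contains many errors, every word the recognizer got right still lands in the index, so a listener can find the spot and check the original audio.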

In addition to NASA making the data accessible to the public, the UT team is sharing its speech-processing technology with the speech- and language-processing community, including companies such as Apple and Google, as well as academic researchers. “This also lets … large numbers of people work collaboratively together to make better tools to overcome multiple speakers, noise, people overlapping in talking to each other,” said Hansen.
