Simulator tests software for next-gen prosthetics

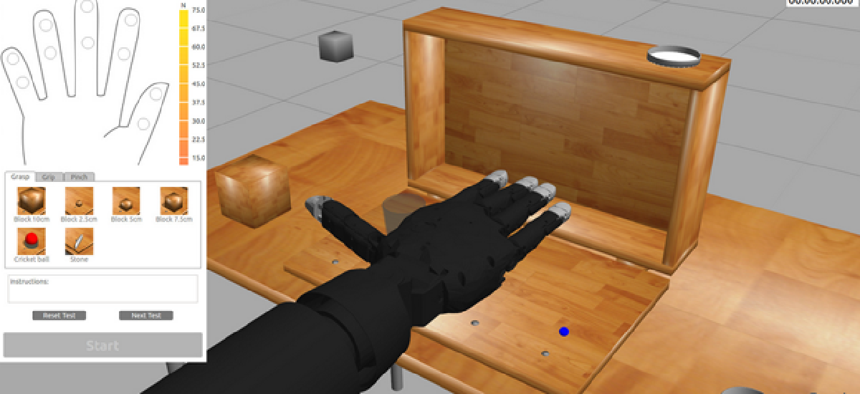

The Gazebo simulator is helping DARPA develop prosthetic limbs with intuitive controls and sensory feedback.

Veterans’ care is a priority for the Defense Department, so much so that the DOD division tasked with researching and developing next-generation warfighting capabilities has been working to build better, more natural artificial limbs for amputee veterans.

DARPA’s Hand Proprioception and Touch Interfaces (HAPTIX) program aims to develop and test prosthetic limbs that are as functional as possible and that even provide the sensations of a natural hand.

Upper-limb amputees using prosthetic devices don’t get proprioceptive feedback, the information sent back to the brain that lets it know how a limb is moving. Without this feedback, even the most advanced prosthetic limbs will feel numb and their usability will be impaired, according to DARPA.

HAPTIX aims to give amputees prosthetic limb systems that not only feel and function like natural limbs, but also receive rich sensory information.

The program plans to adapt one of the prosthetic limb systems developed recently under DARPA’s Revolutionizing Prosthetics program to incorporate interfaces that provide intuitive control and sensory feedback to users. These interfaces would build on advanced neural-interface technologies being developed through DARPA’s Reliable Neural-Interface Technology program, DARPA said.

The HAPTIX program partnered with the Open Source Robotics Foundation (OSRF) to use Gazebo to test its designs. Gazebo is an open source simulator that makes it possible to rapidly test algorithms, design robots and perform regression testing using realistic scenarios, OSRF said. Gazebo has previously provided simulation environments for DARPA’s Robotics Challenge.

The latest release of Gazebo supports the OptiTrack motion capture system for evaluating the devices’ motion in space; the NVIDIA 3D vision system; teleoperation options such as the Razer Hydra (a precision remote control equipped with a joystick), the SpaceNavigator (a 3D mouse), a mixer board and a keyboard; a high-dexterity prosthetic arm; and programmatic control of the simulated arm from Linux, Windows and MATLAB.

“Our track record of success in simulation as part of the DARPA Robotics Challenge makes OSRF a natural partner for the HAPTIX program,” John Hsu, chief scientist at OSRF, said in a foundation blog post. “Simulation of prosthetic hands and the accompanying GUI will significantly enhance the HAPTIX program’s ability to help restore more natural functionality to wounded service members.”

For those interested in running the simulation themselves, OSRF will provide a list of the essential equipment and software. Essentials include a computer able to run Gazebo, a Polhemus motion capture device, NVIDIA stereo glasses and a 3D monitor, and a spacenav joystick.
