Can your smartphone drive your car?

By adding a laser sensor to a smartphone, researchers at MIT created a low-cost device that can deliver the high-resolution distance sensing required for robotic navigation.

One of the biggest obstacles to making affordable mobile robots is the high cost of equipment for sensing and navigating the environment. The current go-to technology is lidar -- light detection and ranging -- which measures the time it takes for rapid laser pulses to reflect off objects and return to the emitting device.
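The timing principle behind lidar comes down to one line of arithmetic: the pulse travels out and back, so the distance is half the round-trip time multiplied by the speed of light. The short Python sketch below illustrates that relationship only; the function names are hypothetical and do not describe any particular lidar product.

```python
# Illustrative time-of-flight arithmetic; function names are hypothetical.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the meter


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target given the round-trip time of a laser pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse covers the distance twice (out and back), hence the division by 2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse of tof_distance_m: how long a pulse takes to return."""
    if distance_m < 0:
        raise ValueError("distance cannot be negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A target 5 meters away returns the pulse in a few tens of nanoseconds.
    t = round_trip_time_s(5.0)
    print(f"round trip for 5 m: {t * 1e9:.2f} ns")
    print(f"distance recovered: {tof_distance_m(t):.3f} m")
```

Timing intervals that short calls for specialized electronics, which helps explain why the hardware is expensive.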

The downside of lidar is cost. The device used in Google’s self-driving vehicles, for example, is reported to cost $70,000. According to researchers at MIT, however, the job can be performed at a fraction of that price using an off-the-shelf smartphone with a $10 laser attachment.

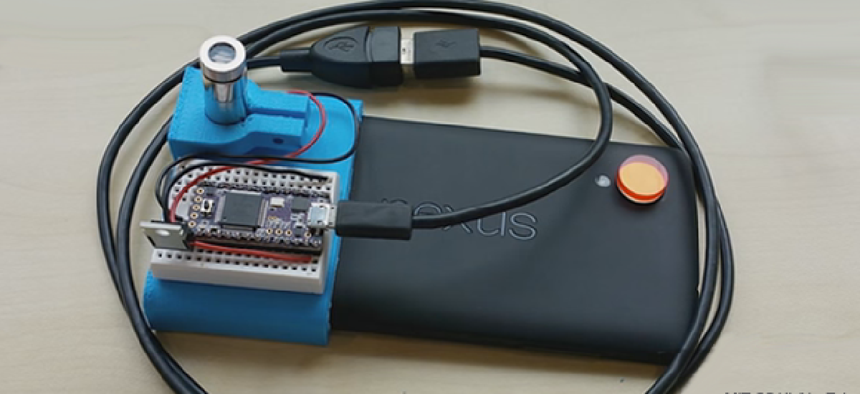

Called Smartphone LDS (laser distance sensor), the prototype, developed by a team at MIT’s Computer Science and Artificial Intelligence Laboratory, uses the smartphone’s camera to record reflections of low-energy light bursts from the laser, which is mounted at the bottom of the phone. The position of objects is determined by computing where the light falls on the camera’s sensor.
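Computing distance from where reflected light lands on a sensor is generally known as laser triangulation. The article does not give the team's actual formulas, so the Python sketch below uses the textbook pinhole-camera version as an assumption: with the laser offset from the camera by a known baseline, depth equals focal length times baseline divided by the pixel offset of the laser spot. The function names and the example focal length and baseline are hypothetical.

```python
# Textbook laser-triangulation sketch; NOT the MIT team's published method.
# All names and the example numbers are hypothetical.


def triangulate_depth_m(pixel_offset_px: float,
                        focal_length_px: float,
                        baseline_m: float) -> float:
    """Depth of the laser spot under a simple pinhole-camera model.

    pixel_offset_px: how far the spot lands from where a spot at infinity would.
    focal_length_px: camera focal length expressed in pixels.
    baseline_m:      separation between the laser and the camera.
    """
    if pixel_offset_px <= 0:
        raise ValueError("pixel offset must be positive")
    return focal_length_px * baseline_m / pixel_offset_px


def depth_error_m(depth_m: float,
                  focal_length_px: float,
                  baseline_m: float,
                  pixel_error_px: float = 1.0) -> float:
    """Approximate depth uncertainty caused by a given pixel localization error.

    Differentiating depth = f * b / offset gives an error that grows with
    the square of the depth.
    """
    return depth_m ** 2 / (focal_length_px * baseline_m) * pixel_error_px


if __name__ == "__main__":
    f_px, b_m = 3000.0, 0.10  # assumed values for illustration only
    for offset in (150.0, 100.0, 60.0):
        z = triangulate_depth_m(offset, f_px, b_m)
        err = depth_error_m(z, f_px, b_m)
        print(f"offset {offset:5.0f} px -> depth {z:.2f} m, ~{err * 100:.1f} cm per pixel of error")
```

Under this model, doubling the distance quadruples the depth error for the same pixel error, which is the general reason triangulation sensors lose accuracy with range.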

The current prototype is fast and accurate enough to guide vehicles moving at speeds up to almost 10 miles per hour, according to Jason Gao, a doctoral student and member of the Smartphone LDS team. At a range of 3 to 4 meters, the system gauges depth to an accuracy measured in millimeters, while at 5 meters, the accuracy declines to 6 centimeters.

Gao said the team believes it can improve the accuracy and resolution to levels sufficient for ordinary driving. “Perhaps it’s not an appropriate sensor for something like a race car,” Gao conceded. “Our system is really suited for a more mass-market class of applications where we expect to see a lot more slower-moving or medium-speed autonomous vehicles out there doing all sorts of tasks for humans.”

With advances in the quality of processors and smartphones, the team expects the capabilities of Smartphone LDS to improve rapidly as well.

“We actually are limited by the processing right now,” Gao said. The cameras on the current generation of smartphones can take higher-resolution images than the existing processors can handle in a timely fashion, he explained. “Processing higher-resolution images would improve our angular resolution and our range resolution or accuracy as well.”

Gao also noted that the prototype device uses a phone with a 30-frame-per-second camera. Smartphones with 240-frame-per-second cameras are currently available, and using them would further enhance the system. A faster shutter speed allows the use of higher-powered laser bursts, Gao said, and that will enhance ranging capabilities.
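One way to see why frame rate matters is to work out how far a vehicle moves between consecutive camera frames. The Python sketch below does that arithmetic for the roughly 10 mph top speed reported for the prototype, assuming (as a simplification not stated by the team) that each frame yields one fresh set of range measurements. The function name is hypothetical.

```python
# Back-of-the-envelope arithmetic; assumes one range update per camera frame.
MPH_TO_M_PER_S = 0.44704  # exact: 1609.344 meters per mile / 3600 seconds per hour


def travel_per_frame_m(speed_mph: float, frames_per_second: float) -> float:
    """Distance a vehicle covers between two consecutive camera frames."""
    if frames_per_second <= 0:
        raise ValueError("frame rate must be positive")
    return speed_mph * MPH_TO_M_PER_S / frames_per_second


if __name__ == "__main__":
    for fps in (30, 240):
        gap_cm = travel_per_frame_m(10.0, fps) * 100
        print(f"{fps:3d} fps at 10 mph: {gap_cm:.1f} cm traveled between frames")
```

At 10 mph the vehicle covers about 15 centimeters between frames at 30 frames per second, and about 2 centimeters at 240.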

Smartphone LDS is in the process of being patented, and Gao said the team has begun receiving inquiries from the private sector. The team expects the technology to be used in diverse kinds of robots, from self-driving vehicles to package-delivery drones to robots that pick up trash.

“Lidar has been a critical sensor for all classes of robotics that need to navigate the real world,” Gao said. “This is essentially providing the same kind of data as lidar but at much lower cost.”