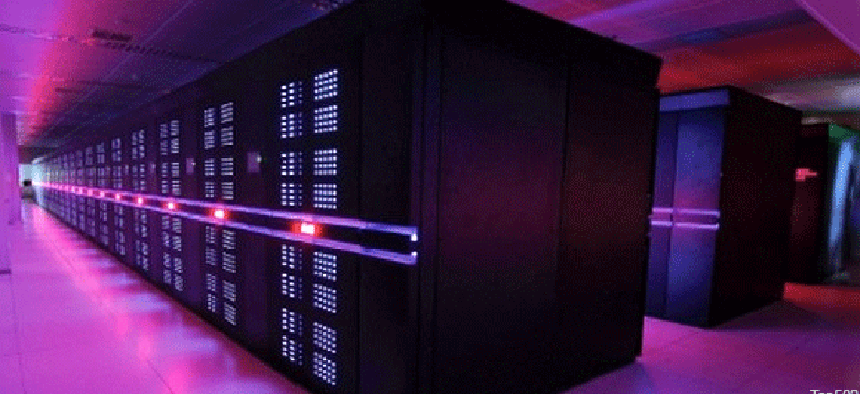

China’s Tianhe-2 takes supercomputing lead, but should US worry?


The country's new Tianhe-2 system leapfrogs the Energy Department’s Titan, but it's just one wrinkle in the move toward new techniques and, eventually, exascale computing.

China has recaptured the top spot in supercomputer speed with its Tianhe-2 system, CNN reports. Like most other new supercomputer designs, it is a hybrid system that pairs conventional CPUs with GPU-style accelerator chips. Tianhe-2, also called Milkyway-2, has 16,000 computing nodes, each made up of two Intel Ivy Bridge Xeon processors and three Xeon Phi coprocessors, for a total of 3,120,000 cores.
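The 3,120,000-core total can be checked with simple arithmetic. The sketch below assumes the commonly reported per-chip core counts for this system, 12 cores per Ivy Bridge Xeon and 57 per Xeon Phi; neither figure appears in the article.

```python
# Sketch: reproduce Tianhe-2's reported total of 3,120,000 cores.
# ASSUMPTION: 12 cores per Ivy Bridge Xeon and 57 cores per Xeon Phi are the
# commonly reported figures for this system; the article does not state them.

NODES = 16_000
XEONS_PER_NODE = 2
PHIS_PER_NODE = 3
CORES_PER_XEON = 12  # assumed
CORES_PER_PHI = 57   # assumed


def total_cores(nodes: int, xeons: int, phis: int, xeon_cores: int, phi_cores: int) -> int:
    """Total cores = nodes x (cores on the CPUs + cores on the coprocessors)."""
    per_node = xeons * xeon_cores + phis * phi_cores
    return nodes * per_node


if __name__ == "__main__":
    cores = total_cores(NODES, XEONS_PER_NODE, PHIS_PER_NODE, CORES_PER_XEON, CORES_PER_PHI)
    print(f"{cores:,} cores")  # prints "3,120,000 cores"
```

Under those assumptions, 2,736,000 of the cores, roughly 88 percent, sit on the Xeon Phi coprocessors rather than on the conventional CPUs.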

It reached 33.9 petaflops, almost double what Titan, at the Energy Department's Oak Ridge National Laboratory, achieved when it captured the No. 1 spot in November 2012. (A petaflop is a quadrillion floating-point operations per second.)
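The "almost double" comparison is a simple ratio. This minimal sketch assumes Titan's Linpack result of about 17.59 petaflops from the November 2012 TOP500 list, a figure the article does not give.

```python
# Sketch: compare Tianhe-2's speed with Titan's.
# ASSUMPTION: Titan's 17.59-petaflop Linpack figure (November 2012) is not in the article.

PETA = 10**15  # a petaflop is 10**15 floating-point operations per second

TIANHE2_PFLOPS = 33.9
TITAN_PFLOPS = 17.59  # assumed


def speedup(new_pflops: float, old_pflops: float) -> float:
    """How many times faster the new system is than the old one."""
    return new_pflops / old_pflops


if __name__ == "__main__":
    print(f"Tianhe-2 / Titan: {speedup(TIANHE2_PFLOPS, TITAN_PFLOPS):.2f}x")  # prints "Tianhe-2 / Titan: 1.93x"
    print(f"Tianhe-2: {TIANHE2_PFLOPS * PETA:.3e} flop/s")  # prints "Tianhe-2: 3.390e+16 flop/s"
```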

While everyone wants to be No. 1, Gary Grider, acting leader of the High Performance Computing Group at Los Alamos National Laboratory, isn’t concerned about the speed race. The United States has a plan in place to reach exaflop (1,000-petaflop) speeds by 2018 or 2020 and is already laying the groundwork.
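To put the exaflop goal in perspective, the sketch below computes how far today's fastest machine is from 1,000 petaflops and what compound yearly speedup would close that gap by 2018 or 2020. Treating 2013 as the starting year is an assumption for illustration; the article does not state its date.

```python
# Sketch: distance from Tianhe-2's 33.9 petaflops to one exaflop.
# ASSUMPTION: 2013 is used as the starting year for the growth estimate.

PFLOPS_PER_EFLOPS = 1_000  # 1 exaflop = 1,000 petaflops = 10**18 flop/s


def gap_to_exascale(current_pflops: float) -> float:
    """Factor by which a system must speed up to reach one exaflop."""
    return PFLOPS_PER_EFLOPS / current_pflops


def required_annual_growth(current_pflops: float, start_year: int, target_year: int) -> float:
    """Compound yearly speedup needed to close the gap by target_year."""
    years = target_year - start_year
    return gap_to_exascale(current_pflops) ** (1.0 / years)


if __name__ == "__main__":
    print(f"gap: {gap_to_exascale(33.9):.1f}x")  # prints "gap: 29.5x"
    for target in (2018, 2020):
        growth = required_annual_growth(33.9, 2013, target)
        print(f"by {target}: about {growth:.2f}x per year")
```

The point of the compound-growth form is that a roughly 30-fold gap looks very different spread over five years than over seven.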

In fact, Tianhe-2 more or less follows the same path the United States is taking. For the most part, it is a much larger version of Titan, and it gets better results mainly because it has more cores.

According to Grider and others in the supercomputing field, progress takes more than making systems bigger; significant gains can also come from improving the software that runs on them.

Percy Tzelnic, senior vice president of the Fast Data Group for EMC, which is helping to build supercomputers for the government, explained that most software can't yet take full advantage of the combination of CPUs and GPUs, since programmers are still learning how to optimize for it. "The heterogeneous architecture adds major complexity; the programmer has to identify regions of the code that are amenable to GPU acceleration, which is great for SIMD [single instruction, multiple data] computing but not so great for general purpose computing. And the programmer could run all of the code on the GPU, but that might actually cause slow-downs," Tzelnic said.
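Tzelnic's trade-off can be illustrated with an Amdahl's-law-style model, a standard way of estimating overall speedup when only part of a program is accelerated. This is a swapped-in textbook model, not EMC's method, and the 80/20 split and the 10x and 0.5x factors below are purely hypothetical.

```python
# Sketch: Amdahl-style speedup when different regions of a program run at different speeds.
# All fractions and speedup factors used below are hypothetical.
import math


def overall_speedup(regions: list[tuple[float, float]]) -> float:
    """Each region is (fraction_of_original_run_time, speedup_of_that_region).

    A region speedup below 1.0 means that region runs slower than it did on the CPU.
    """
    total_fraction = sum(frac for frac, _ in regions)
    if not math.isclose(total_fraction, 1.0):
        raise ValueError("region fractions must sum to 1")
    new_time = sum(frac / factor for frac, factor in regions)
    return 1.0 / new_time


if __name__ == "__main__":
    # Hypothetical program: 80% of run time is SIMD-friendly, 20% is branchy general-purpose code.
    selective = overall_speedup([(0.8, 10.0), (0.2, 1.0)])   # offload only the SIMD part
    everything = overall_speedup([(0.8, 10.0), (0.2, 0.5)])  # branchy part moved too, runs 2x slower
    print(f"selective offload:  {selective:.2f}x")   # prints "selective offload:  3.57x"
    print(f"offload everything: {everything:.2f}x")  # prints "offload everything: 2.08x"
```

Under these made-up numbers, moving the general-purpose 20 percent onto the accelerator costs more than it saves, which is the kind of slowdown Tzelnic warns about.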

There are other bottlenecks as well, such as the I/O software stack and the physical disk drives, which will likely be replaced with flash storage in the next supercomputer. Combining all those technologies with a bigger machine will lead to the next step on the speed ladder. The next supercomputer scheduled to be built at Los Alamos will be named Trinity, and Grider expects it to be about five times bigger than Titan and to perform in the 50- to 100-petaflop range once the software is streamlined and the bottlenecks are eliminated.
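Why storage matters for a bigger machine can be sketched with a simple additive model: run time is compute time plus the time needed to write output to storage. The job size, output volume and both storage bandwidths below are hypothetical, and the model assumes compute and I/O do not overlap.

```python
# Sketch: a bigger machine speeds up computing but not I/O unless storage also gets faster.
# ASSUMPTION: all job sizes and bandwidths are hypothetical; compute and I/O do not overlap.

TB = 10**12  # bytes in a terabyte (decimal)


def run_time_s(compute_s: float, output_bytes: float, storage_bytes_per_s: float) -> float:
    """Wall-clock time = time spent computing + time spent writing output to storage."""
    return compute_s + output_bytes / storage_bytes_per_s


if __name__ == "__main__":
    # Hypothetical job: 10,000 s of compute that writes 500 TB at 1 TB/s (500 s of I/O).
    baseline = run_time_s(10_000, 500 * TB, 1 * TB)
    # Five times more compute power, same drives: the I/O time does not shrink.
    bigger = run_time_s(10_000 / 5, 500 * TB, 1 * TB)
    # Five times more compute plus a hypothetical storage tier 10x faster.
    bigger_faster_io = run_time_s(10_000 / 5, 500 * TB, 10 * TB)
    print(f"bigger machine, same drives:    {baseline / bigger:.2f}x")            # prints "... 4.20x"
    print(f"bigger machine, faster storage: {baseline / bigger_faster_io:.2f}x")  # prints "... 5.12x"
```

In this toy model, a machine with five times the compute power falls short of a fivefold speedup until the storage bottleneck is addressed as well.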

Beyond that, especially in the move to exaflop computing, progress will most likely require something entirely new, because today's CPU/GPU designs can only go so far, even when fully optimized and scaled up with more cores. So the government is funding research in key areas, in the hope that innovations will surface within the next five years for use in the planned 2020 supercomputer.

For now, China has retaken the lead in the ongoing race toward ever-faster computing. But the path to exaflops is more of a jog than a sprint. Some of the real payoffs ahead could be answers to questions in areas such as climate change, gene therapy and disease research that are out of reach today, even with the fastest machines. Faster supercomputers may provide the answers we seek, but we'll have to wait a bit longer.