Ranking the world's best big data supercomputers

Connecting state and local government leaders

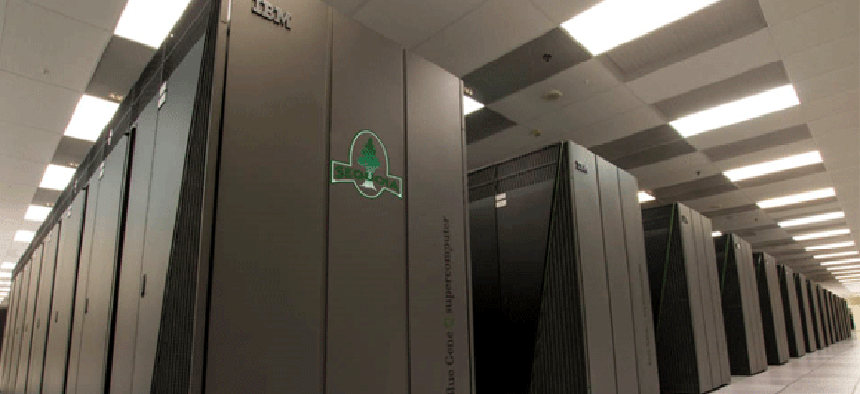

The Graph 500 ranks HPC systems not by petaflops, but on how well they handle data-intensive workloads. Livermore's Sequoia, an IBM Blue Gene Q system, leads the pack.

There’s more to measuring a supercomputer than just going by its raw floating-point processing power, especially as agencies find more uses for them.

One of the emerging uses for high-performance computing (HPC) is in analyzing big data, a process different from the 3D modeling and simulations supercomputers have traditionally been used for. Which supercomputer architectures are best for analyzing data-intensive loads? That’s the idea behind the Graph 500, a project announced three years ago at the International Supercomputing Conference, which this month released its latest rankings.

At the top of the list, as it has been since November 2011, is Lawrence Livermore National Labs’ Sequoia, a 20 petaflop machine not lacking in raw power — it currently ranks third on the Top 500 list of the world’s fastest supercomputers, and was first a year ago — but with a focus on performing analytic calculations on vast stores of data.

The Graph 500 serves as a complement to the Top 500, which uses the Linpak benchmark to measure a computer’s capacity to execute floating-point operations, the mathematical calculations used in 3D physics modeling for things such as simulating hurricanes, the Big Bang or nuclear tests. (China’s Tianhe-2, recently named the fastest computer, achieved 33.9 petaflops, or 33.9 quadrillion floating point operations per second.)

The Graph 500, as the name suggests, instead measures how fast a computer handles the graph-type problems commonly used in cybersecurity, medical informatics and other data-intensive applications, LLNL said in an announcement. By way of explanation, LLNL compared a graph of vertices and edges to a graphical image of Facebook (and its billion users), in which each vertex represents a user and each edge represents a connection between users. The Graph 500 employs an enormous data set to measure how fast a machine can start at one vertex and discover all the others. For the record, Sequoia achieved 15,363 GTEPS, for giga (billions) traversed edges per second.

Sequoia is an IBM Blue Gene Q system, a type well-suited to data-intensive tasks. Blue Gene Q systems take up nine of the top 11 spots on the list, including the second-place Mira at Argonne National Laboratory.

"The Graph 500 provides an additional measure of supercomputing performance, a benchmark of growing importance to the high-performance computing community," said Jim Brase, deputy associate director for big data in LLNL’s Computation Directorate. "Sequoia's top Graph 500 ranking reflects the IBM Blue Gene/Q system's capabilities. Using this extraordinary platform Livermore and IBM computer scientists are pushing the boundaries of the data-intensive computing critical to our national security missions."

The list, along with the Green Graph 500, which rates supercomputers’ power efficiency (another growing HPC trend), is managed by international steering committee of more than 50 experts from national labs, academia and industry.

Considering the move toward big data analytics for everything from health care to controversial anti-terrorism monitoring, the importance of the Graph 500 — and the approach to supercomputing architectures and software it represents — is just getting starting to be recognized.

In fact, despite its name, when the first list appeared in November 2010, there were only eight machines on it. That number has grown to 142 with the latest list. But it probably won’t be long until the Graph 500 name can be taken literally.