Virtualization breaks a high-performance computing barrier

A Defense Department research team introduces virtualization into the world of scientific simulations, leading to efficiency and cost savings in high-performance computing.

Virtualization is all the rage in most places, even for large organizations like federal data centers. However, for all the advantages virtualization can bring, there is one piece of the computing arena the technology has not been able to crack, until now: high-performance computing and simulation environments.

The processing requirements of most scientific computing call for hefty hardware blocks with lots of CPUs and GPUs in massive racks supported by complicated cooling schemes. Computing tasks are carefully loaded into those systems, and competition for computing cycles can be both intense and political. Simulation applications have operating system requirements that are not often compatible with virtualized environments.

Few places feel the forces working against virtualization as intensely as the Johns Hopkins University Applied Physics Laboratory Air and Missile Defense Department's Combat Systems Development Facility.

Edmond DeMattia, a senior system engineer and virtualization architect at the facility, described its configuration, which is typical of many government simulation laboratories.

"We had two stovepipe systems with one running Windows and one running Linux," he said. "There are 1,500 cores per cluster, and everyone was sharing that computer using grid scheduling."

The tasks required of the system are intense. DeMattia explained that most simulations use the Monte Carlo method, a class of algorithms that feed repeated random samples into equations to obtain concrete numerical results. As a result, most simulations need to be run many thousands of times.
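To make the method concrete, here is a minimal Python sketch of a Monte Carlo estimate. It is not one of the lab's simulations: it estimates pi by random sampling, and the function name `estimate_pi` and the fixed seed are assumptions chosen purely for illustration.

```python
import random

def estimate_pi(num_samples: int, seed: int = 0) -> float:
    """Estimate pi by sampling random points in the unit square."""
    rng = random.Random(seed)  # fixed seed (an assumption) keeps runs repeatable
    inside = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        # Count points that land inside the quarter circle of radius 1.
        if x * x + y * y <= 1.0:
            inside += 1
    # The quarter circle covers pi/4 of the square, so scale the hit rate by 4.
    return 4.0 * inside / num_samples

print(estimate_pi(100_000))
```

Each sample is independent of the others, and the estimate only tightens as the sample count grows. Both traits help explain why such workloads are run so many times and split so readily across many cores.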

"Some simulations take five seconds per task, and we run that same task up to a million times," DeMattia said. "While others may take 15 hours per task, but are only run 1,000 times."

The problem facing DeMattia and the lab is that while the computing demands are intense, the hardware is not in use all the time. The need to support different operating systems complicates matters further: some of the simulation jobs come from outside sources, and the lab has to accept their programming requirements.

It also means that, in many instances, either the Windows or the Linux stack might be maxed out while the other sits idle. To keep up with such demand or to expand capacity, most labs purchase more computers.

This leads to wasted resources when cycles aren't being used, even as more power, cooling and physical space are being called for. Faced with the situation, DeMattia believed there had to be a way to tap into those idle computing cycles, but it simply wouldn’t be possible using traditional computing methods.

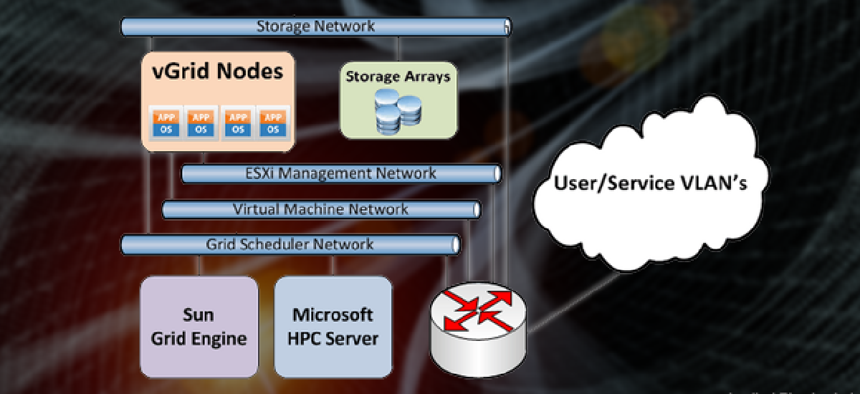

To tackle the problem, DeMattia began to experiment with ESXi from VMware, a bare-metal hypervisor based on the VMkernel operating system that manages virtual machines running on top of it.

ESXi is the one part of the computing grid that knows the grid's full capacity. With the tool in place, the various operating systems don’t know the others exist. Each one, however, operates as if it has double the computing nodes, because it can tap into nodes normally reserved for another OS whenever they are available. The ESXi layer manages the entire system.
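The effect of pooling can be illustrated with a toy model in Python. This is a sketch of the idea only, not of how ESXi schedules work: the function names, the proportional-sharing rule and the demand figures are all hypothetical, and the 3,000-core pool simply adds together the two 1,500-core clusters DeMattia described.

```python
def static_allocation(demand, cores_per_os):
    """Each OS can use only its own dedicated cluster."""
    return {name: min(cores, cores_per_os) for name, cores in demand.items()}

def pooled_allocation(demand, total_cores):
    """All operating systems draw from one shared pool of cores."""
    total_demand = sum(demand.values())
    if total_demand <= total_cores:
        return dict(demand)  # everyone gets what they asked for
    # Oversubscribed: share the pool in proportion to demand (rounded down).
    return {name: cores * total_cores // total_demand
            for name, cores in demand.items()}

# Hypothetical moment in time: Windows jobs want far more than one cluster,
# while the Linux side is nearly idle.
demand = {"windows": 2_400, "linux": 300}

static = static_allocation(demand, cores_per_os=1_500)
pooled = pooled_allocation(demand, total_cores=3_000)

print("static:", static, "busy cores:", sum(static.values()))
print("pooled:", pooled, "busy cores:", sum(pooled.values()))
```

In this made-up scenario the separate clusters keep 1,800 cores busy while 900 cores' worth of Windows work waits. The shared pool serves all 2,700 requested cores.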

Turning a high-performance computing environment into a virtualized one was new territory for DeMattia. But with the added layer, he wasn't really expecting too much.

In fact, during the initial experiments, he was trying to figure out how much performance loss would be acceptable in the new system, offset by the gains of opening up the new nodes. "I had it figured that doing it this way would result in a 6 to 8 percent loss, which would have been acceptable," DeMattia said. "But I was shocked when we measured a 2 percent gain instead."

Once the optimal virtual grid configuration was established, DeMattia's team, which included lead automation engineer Irwin Reyes and security office systems administrator Valerie Simon, removed physical nodes from the stove-piped HPC grids and simultaneously incorporated them into the vGrid architecture.

The end result was a seamless migration of independent grids into a fully virtualized environment that more than doubled the usable CPU cores for each OS platform.

"My team fundamentally redesigned how high-performance scientific computing is performed in the Air and Missile Defense Department by utilizing virtualization and distributed storage as the framework for pooling resources across multiple departments," DeMattia said.

"By leveraging the ESXi abstraction layer, multiple stove-piped high-performance computing grids are aggregated into a single 3,728 core vGrid, hosting multiple operating systems and grid scheduling engines. This has allowed our engineers to achieve decreased simulation runtimes by an order of magnitude for many studies."

It took a while for others to appreciate what DeMattia's team had done: They had created a virtualized high-performance computing environment that could reduce idle computing cycles and run more efficiently at the same time. Even a gain of just a few percentage points in performance represents a big jump when pushing millions of calculations through the system. Another significant advantage is the ability to tap into every node at the same time.
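A rough sense of scale comes from the task sizes DeMattia quoted earlier. The Python sketch below compares one original 1,500-core cluster with the 3,728-core vGrid. It assumes each task occupies exactly one core and cannot be split, and it ignores scheduling overhead and competing users. None of those assumptions comes from the lab, and the helper names are hypothetical.

```python
import math

def core_hours(seconds_per_task, num_tasks):
    """Total compute demanded by a study, in core-hours."""
    return seconds_per_task * num_tasks / 3600

def best_case_seconds(seconds_per_task, num_tasks, cores):
    """Idealized wall-clock time: tasks run in full waves, one task per core."""
    waves = math.ceil(num_tasks / cores)
    return waves * seconds_per_task

# Task sizes quoted by DeMattia earlier in the article.
short_study = (5, 1_000_000)      # 5-second tasks, run a million times
long_study = (15 * 3600, 1_000)   # 15-hour tasks, run 1,000 times

for name, study in (("short", short_study), ("long", long_study)):
    print(name, "core-hours:", round(core_hours(*study)))
    for cores in (1_500, 3_728):  # one original cluster vs. the pooled vGrid
        hours = best_case_seconds(*study, cores) / 3600
        print(f"  {cores} cores: {hours:.2f} h best case")
```

Under these assumptions the short study needs about 1,389 core-hours, and its best-case runtime falls from roughly 0.93 hours to roughly 0.37 hours. The long study needs 15,000 core-hours but cannot finish faster than a single 15-hour task on either system. For that study, extra cores help mainly by letting other work run alongside it.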

Back at the Air and Missile Defense Department, vGrid led to substantial cost savings. Instead of buying new computing nodes, which can run up to $10,000 each, IT managers can tap their existing infrastructure to its fullest. That eliminated the need to buy additional computing resources to meet peak demand, saving an estimated $504,000 in hardware costs. Beyond that, it would have cost another $40,000 per year to cool and power the expansion.
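The arithmetic behind those figures is simple enough to sketch in Python. The dollar amounts come from the article. The implied node count and the five-year horizon are inferences made here for illustration, not numbers reported by the lab.

```python
HARDWARE_SAVINGS = 504_000      # estimated hardware purchases avoided (article)
MAX_NODE_PRICE = 10_000         # nodes "can run up to $10,000 each" (article)
YEARLY_POWER_COOLING = 40_000   # avoided power and cooling per year (article)

def avoided_cost(years):
    """Hardware savings plus avoided power and cooling over a number of years."""
    return HARDWARE_SAVINGS + YEARLY_POWER_COOLING * years

# At the top price the hardware figure corresponds to about 50 nodes;
# cheaper nodes would mean more of them. This is an inference, not a reported count.
min_nodes_avoided = HARDWARE_SAVINGS / MAX_NODE_PRICE

print(f"at least ~{min_nodes_avoided:.0f} nodes not purchased")
print(f"avoided cost over 5 years (hypothetical horizon): ${avoided_cost(5):,}")
```

Over the hypothetical five-year horizon, the avoided cost would come to $704,000.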

DeMattia said he believes that other high-performance simulation and government labs can make use of this new technology. Even though most have shied away from trying to take high-performance computing into a virtualized space, he said they are highly complementary technologies when deployed correctly.

"I get a little embarrassed when people ask me to talk about what we did," DeMattia said. "At its core, it's really just a simple process using technology in a way other than it was designed."