Oak Ridge taps utility data for energy-use models

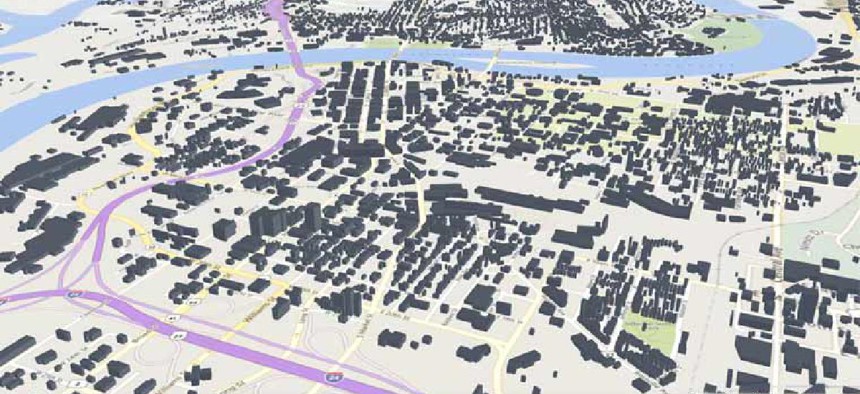

Using data from Chattanooga's power company and a decommissioned supercomputer, researchers created more than 178,000 building models that will help the nation reduce energy use by 30%.

In its quest to reduce American buildings’ energy consumption, the Department of Energy is using building models and simulations it made with the help of the Electric Power Board (EPB) in Chattanooga, Tenn.

Building modeling is crucial to meeting DOE's goal of cutting power use per square foot by 30% by 2030. To that end, Joshua New, subprogram manager for software, tools and models at Energy's Oak Ridge National Laboratory, is leading research to have models of the country’s 124 million buildings open and freely available by the end of 2020. “I believe we’re on track to accomplish that vision,” he said.

“How do you reach into every building in America and turn their energy use knob down a third? That’s a pretty big challenge,” New said. “If we look at the best way to convert some of the analysis we’re doing into real energy savings, utilities are the key master to our gatekeeper. They’re the ones that hold the actual measured data to individual buildings and building owners.”

In the past two and a half years, the lab has been honing a digital twin of EPB, the city of Chattanooga’s utility. That’s easier to do than it sounds because EPB is also the region's supplier of fiber-optic gigabit-speed internet and built a smart grid that accesses billions of data points. It shared with the lab the utility data it records every 15 minutes for every building in its 535-square-mile service territory. The lab then took that data and ran simulations to compare and validate the 178,368 building models it has created.

“We ran those 178,000 buildings for a year to create the baselines and then for every technology we want to apply -- bringing the insulation up to code, bringing the lighting up to code, switching out to a different HVAC system, making the HVAC system more efficient, creating a dual-fuel system so that they could swap from electricity to natural gas for heating when it’s needed to lower the electrical demand,” New said. In total, the team ran nine monetization scenarios, each lasting six and a half hours.

Simulating an average building model for a year can take anywhere from two to 10 minutes – maybe just 30 seconds, if the building is really small, he added. But at 178,000-plus buildings, cost becomes a factor.
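To give a sense of that scale, the short Python sketch below multiplies the per-building run times New cited by the number of models. It is a back-of-the-envelope estimate only: the function name `core_hours` is hypothetical, and it assumes each simulation occupies a single processor core, which the article does not state.

```python
def core_hours(n_buildings: int, minutes_per_building: float, n_runs: int = 1) -> float:
    """Total single-core hours for n_runs annual simulations of every building.

    Assumption (not from the article): one simulation occupies one core.
    """
    return n_buildings * minutes_per_building * n_runs / 60.0


N_BUILDINGS = 178_368  # building models reported by the lab

# Range for one pass over every building, using the 2-to-10-minute figures.
low = core_hours(N_BUILDINGS, 2)
high = core_hours(N_BUILDINGS, 10)

print(f"One pass over all buildings: {low:,.0f} to {high:,.0f} core-hours")
```

Under those assumptions, a single pass over every building comes to roughly 5,900 to 29,700 core-hours, and each additional scenario multiplies that total (set `n_runs` accordingly).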

“You could potentially run it in the cloud, but it would be tens of thousands of dollars to run those simulations, and so we were lucky to have a good relationship with the Oak Ridge Leadership Computing Facility and now the Argonne Leadership Computing Facility, where we use the recently decommissioned [Cray XK7 Titan] supercomputer for most of the simulations,” he said.

The effort produced more than 2 million simulations, and EPB is looking to apply some of the electricity-use adjustments it discovered to potentially save $11 million to $35 million a year. One effort that the city is pursuing is using buildings as thermal batteries -- preheating or precooling them to “coast through the hour of critical generation,” New said, which would lower the demand charge in the service area and save EPB and ratepayers money on their annual energy bills. The city plans to deploy about 200 thermostats in fiscal 2020 to further empirically validate those energy and demand savings estimates.

The work with EPB was “just a unique arrangement of relationships and available data that we could get a proof of concept off the ground, but there’s interest and some initial activity in working with several other utilities to do something similar: to create a virtual utility district and figure out which technologies work best in their area,” New said.

Few, if any, utilities have the data availability that EPB does, he said, but that’s not a problem. The lab can quantify how accurate its building models are using the data it already has. “If we applied it to a different area that didn’t have that data available or didn’t have that data at high-temporal resolutions in the first place or couldn’t make it available, the hope is that we would have similar error rates for applying in their service territory,” New said, adding that utilities could simply submit their measured data when it’s available. “You don’t need measured electrical data to use this technique, but the more data you can make available, the more accurate the models will be.”
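As a rough illustration of how a model's accuracy can be quantified against metered data, the sketch below computes two error measures widely used in building-model calibration: the normalized mean bias error (NMBE) and the coefficient of variation of the root-mean-square error, CV(RMSE). The article does not say which metrics the lab uses, so this is an assumption, and the sample readings are invented purely for demonstration. The formulas are also simplified to divide by the number of readings with no degrees-of-freedom adjustment.

```python
import math


def nmbe(measured: list[float], simulated: list[float]) -> float:
    """Normalized mean bias error, in percent of the mean measured value."""
    n = len(measured)
    mean_measured = sum(measured) / n
    bias = sum(m - s for m, s in zip(measured, simulated))
    return 100.0 * bias / (n * mean_measured)


def cv_rmse(measured: list[float], simulated: list[float]) -> float:
    """Coefficient of variation of the RMSE, in percent of the mean measured value."""
    n = len(measured)
    mean_measured = sum(measured) / n
    squared_error = sum((m - s) ** 2 for m, s in zip(measured, simulated))
    return 100.0 * math.sqrt(squared_error / n) / mean_measured


# Hypothetical 15-minute readings (kWh) for one building; not real EPB data.
measured = [10.0, 12.0, 11.0, 13.0]
simulated = [9.0, 12.0, 12.0, 14.0]

print(f"NMBE: {nmbe(measured, simulated):.2f}%")
print(f"CV(RMSE): {cv_rmse(measured, simulated):.2f}%")
```

With this sign convention, a negative NMBE means the model overpredicts on average, while CV(RMSE) captures how far individual intervals stray from the meter readings. Error rates computed this way where data is plentiful are the kind of figure that could then be assumed for a territory without it.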

Building modeling for energy use is in vogue. “In the field of building simulation, urban-scale energy modeling is the new black,” New said. Most cities and counties, though, build their models from local data, such as tax assessor records. By contrast, the lab is looking at the data sources available at scale and building the algorithms to process that data.

As part of their effort, New and his team looked at the cost of modeling and found that for federal performance contracts for projects costing less than $2 million, the cost of creating a good model was between 8% and 47% of the total project cost.

“That is to say, modeling today is simply manually intensive, and it’s only used in very large buildings or projects that have -- like Starbucks -- you have a small building but there are tens of thousands of them,” he said. “Our goal was to flatten that transaction cost. What if we could create a model of every building in America for free and open for use by industry?”

DOE’s Building Technologies Office set the goal of reducing energy consumption by 30% between 2010 and 2030. Currently, buildings use 40% of America’s primary energy and 75% of its electricity, although the electricity share can hit 80% when a majority of the population is using heating or cooling systems during seasons’ extremes, according to the department.

An interactive web visualization of EPB's building data is available here.