We are ignoring the true cost of water-guzzling data centers



The 1960s ushered in a new age of processing digital information, driven by the intelligence needs of the Cold War. Moore’s law, the observation that the number of transistors on a microchip doubles roughly every two years, meant steadily falling costs and the miniaturization of machines that once filled entire rooms. Today, the smartphone you are probably using to read this article is millions of times more powerful than the computer that guided the Apollo missions to the moon.

While those room-sized machines have disappeared, the proliferation of the cloud and the internet of things, in which everything down to our socks can connect to the internet, means ever more processors that need to communicate with data centers around the world. Even something as simple as scrolling down this article triggers communications that may eventually pass through a distant data center.

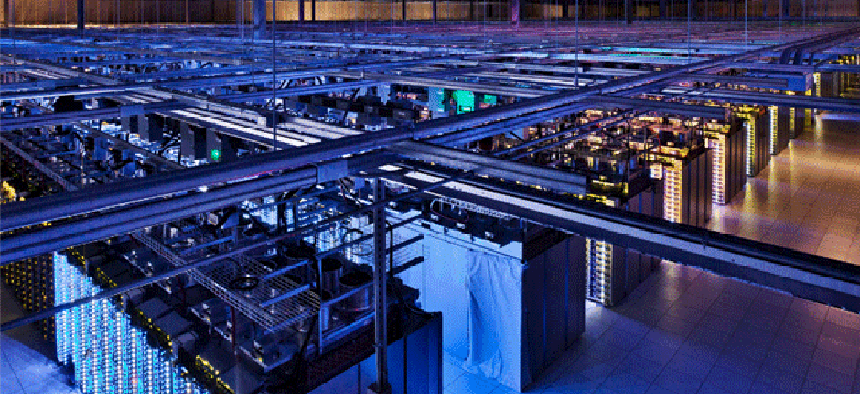

Data centers range in size from small cabinets to vast “hyperscale” warehouses the size of stadiums. Inside are computers called servers, which run the software, apps and websites we use every day.

As of the end of 2020, 597 hyperscale data centers were in operation (39% in the U.S., 10% in China and 6% in Japan), up by almost 50% since 2015. Amazon, Google and Microsoft account for more than half of these, and a further 219 are in various stages of planning.

Energy eaters

Data centers accounted for around 1% to 2% of global electricity demand in 2020. All that processing power generates a lot of heat, so data centers must be kept cool to prevent damage. Some companies use cool air at mountain sites, and Microsoft has experimented with underwater data centers in the cold waters off Scotland, but up to 43% of data center electricity in the U.S. is still used for cooling.

This energy goes into cooling water, which is either sprayed into the air flowing past the servers or evaporated to carry heat away from them. Energy is needed to cool the water, and unless the system is specifically designed as a closed loop, that water is also lost as it evaporates. A relatively small 1 megawatt data center (one that uses enough electricity to power 1,000 houses) relying on these traditional types of cooling would use 26 million liters of water per year.
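To put the 26-million-liter figure in more familiar units, it can be converted into liters per day and liters per kilowatt-hour. This is a back-of-envelope sketch, not the source's method: it assumes the facility draws a constant 1 MW every hour of a 365-day year, and the function names are purely illustrative.

```python
# Back-of-envelope conversion of an annual cooling-water figure.
# Assumption (not from the source): the data center draws its full rated
# power every hour of a 365-day year.

HOURS_PER_YEAR = 365 * 24  # 8,760 hours in a non-leap year


def liters_per_day(annual_water_liters: float) -> float:
    """Average daily water use, spreading the annual total evenly."""
    return annual_water_liters / 365


def liters_per_kwh(annual_water_liters: float, power_mw: float) -> float:
    """Water used per kWh of electricity, assuming constant full load."""
    annual_kwh = power_mw * 1_000 * HOURS_PER_YEAR  # MW -> kW, then kWh
    return annual_water_liters / annual_kwh


if __name__ == "__main__":
    water = 26_000_000  # liters per year for a 1 MW facility, per the article
    print(f"{liters_per_day(water):,.0f} liters per day")
    print(f"{liters_per_kwh(water, 1.0):.2f} liters per kWh")
```

Under those assumptions, the figure works out to roughly 71,000 liters a day, or about 3 liters for every kilowatt-hour the facility consumes. A real facility rarely runs at full load all year, so the actual ratio would differ.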

Water used directly for cooling is what most data center operators focus on, but the largest share of a data center’s water footprint actually comes from electricity generation. Fossil fuel and nuclear power plants heat water into steam, which turns a turbine to generate electricity, and they consume water in the process. Even hydroelectric power involves some water loss from reservoirs.

The transition to renewables is therefore important to reduce both water and carbon footprints. By 2030, wind and solar energy could reduce water withdrawals related to power generation by 50% in the UK, 25% in the US, Germany and Australia, and 10% in India.

More than the daily water recommendation?

Data center water demand is more complicated than the carbon footprint. Reaching zero carbon is a reasonable goal, but zero-water is not necessarily the right choice. Consumption goals need more context.

Some data centers are in regions with abundant water that is easily accessible without competing with other potential users. Others, however, may be built in drought-prone areas with deteriorating infrastructure.

Regional water stress must be considered for each data center, not just in relation to the water used for on-site cooling, but also in relation to the power plants that generate the electricity that powers the center.

For example, a recent U.S. study showed that the western U.S. has more water stress than the eastern U.S., and that electricity generated in the Southwest is more water-intensive because it relies more on hydropower. Despite this, the West and Southwest have more data centers.

Opposition is starting to grow even as new projects are being approved. Local communities in the U.S. have protested against new data centers, which may be one reason Google has in the past treated its water use as a trade secret. Similar concerns led to a temporary ban on new data centers in the Netherlands, and France is in the process of passing new laws to require more transparency.

We do not give water enough value

Companies are not pricing water risk into their calculations when picking locations for data centers. A lower price does not necessarily mean lower risk. When Microsoft assessed its water footprint at a data center in San Antonio, Texas, it found that the true cost of water was 11 times what it was paying.

The same is true of carbon footprints. We undervalue or ignore the abatement costs associated with greenhouse gas emissions, and the impacts are hardly marginal. Carbon dioxide and water are inextricably linked, and climate change is already stressing drought-prone areas around the world.

The first step is transparency. Some companies like Microsoft and Facebook already publish aggregated water data, but others need to do the same. Every operator needs to publish their water efficiency plan and back it up with the relevant regional numbers.

Most data center owners have received the message about reducing their carbon footprint and switching to renewable energy. We regularly see new projects announced with net-zero carbon goals. They now need to do something similar for water.

This article was first posted on The Conversation.
