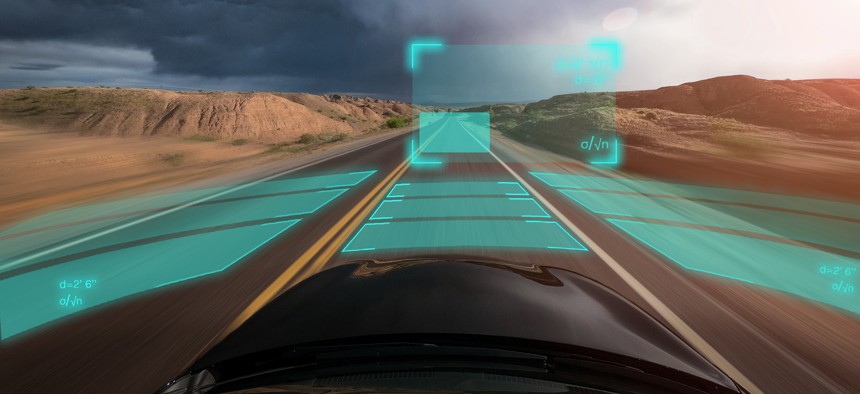

Roadside Objects Can Trick Driverless Cars

GettyImages/darekm101

An ordinary object on the side of the road can trick driverless cars into stopping abruptly or exhibiting other undesired driving behavior, researchers report.

“A box, bicycle, or traffic cone may be all that is necessary to scare a driverless vehicle into coming to a dangerous stop in the middle of the street or on a freeway off-ramp, creating a hazard for other motorists and pedestrians,” says Qi Alfred Chen, professor of computer science at the University of California, Irvine, and coauthor of a new paper on the findings.

Chen recently presented the paper at the Network and Distributed System Security Symposium in San Diego.

Vehicles can’t distinguish between objects that are on the road by pure accident and those left there intentionally as part of a physical denial-of-service attack, Chen says. “Both can cause erratic driving behavior.”

Driverless cars and caution

Chen and his team focused their investigation on security vulnerabilities specific to the planning module, a part of the software code that controls autonomous driving systems. This component oversees the vehicle’s decision-making processes governing when to cruise, change lanes, or slow down and stop, among other functions.

“The vehicle’s planning module is designed with an abundance of caution, logically, because you don’t want driverless vehicles rolling around, out of control,” says lead author Ziwen Wan, a PhD student in computer science. “But our testing has found that the software can err on the side of being overly conservative, and this can lead to a car becoming a traffic obstruction, or worse.”

Boxes and bicycles

For the project, the researchers designed a testing tool, dubbed PlanFuzz, which can automatically detect vulnerabilities in widely used automated driving systems. As shown in video demonstrations, the team used PlanFuzz to evaluate three different behavioral planning implementations of the open-source, industry-grade autonomous driving systems Apollo and Autoware.

The researchers found that cardboard boxes and bicycles placed on the side of the road caused vehicles to permanently stop on empty thoroughfares and intersections. In another test, autonomously driven cars, perceiving a nonexistent threat, neglected to change lanes as planned.
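
To make the idea concrete, here is a minimal sketch of how an automated search for this kind of overcautious behavior might look. It is not PlanFuzz and does not use any Apollo or Autoware code: the toy planner rule, the constants, and every name in it (`StaticObject`, `toy_planner_decides_stop`, `fuzz_roadside_objects`) are hypothetical, chosen only to illustrate the pattern of placing objects beside the lane and flagging cases where the planner stops anyway.

```python
import random
from dataclasses import dataclass

# All values below are illustrative assumptions, not taken from any real system.
LANE_HALF_WIDTH_M = 1.75   # assumed half-width of the driving lane
SAFETY_BUFFER_M = 2.5      # assumed (overly cautious) lateral clearance
LOOKAHEAD_M = 30.0         # assumed planning horizon ahead of the vehicle


@dataclass(frozen=True)
class StaticObject:
    ahead_m: float    # distance ahead of the vehicle along the lane
    lateral_m: float  # signed offset from the lane center


def toy_planner_decides_stop(obj: StaticObject) -> bool:
    """Toy rule: stop if a static object ahead is inside the lateral buffer."""
    in_range = 0.0 <= obj.ahead_m <= LOOKAHEAD_M
    return in_range and abs(obj.lateral_m) < SAFETY_BUFFER_M


def is_off_road(obj: StaticObject) -> bool:
    """True when the object does not physically block the lane."""
    return abs(obj.lateral_m) > LANE_HALF_WIDTH_M


def fuzz_roadside_objects(trials: int, seed: int = 0) -> list[StaticObject]:
    """Randomly place off-road objects; keep those that still trigger a stop."""
    rng = random.Random(seed)
    findings = []
    for _ in range(trials):
        side = rng.choice((-1.0, 1.0))
        obj = StaticObject(
            ahead_m=rng.uniform(0.0, 50.0),
            lateral_m=side * rng.uniform(LANE_HALF_WIDTH_M + 0.01, 5.0),
        )
        if is_off_road(obj) and toy_planner_decides_stop(obj):
            findings.append(obj)
    return findings


if __name__ == "__main__":
    hits = fuzz_roadside_objects(trials=1000)
    print(f"{len(hits)} of 1000 roadside placements caused an unnecessary stop")
```

In this toy setup, any object that sits beyond the lane edge but inside the safety buffer halts the vehicle even though the lane is clear, a simplified analogue of the roadside box or bicycle. A real testing tool would have to exercise the actual planning code and a far richer space of scenarios.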

“Autonomous vehicles have been involved in fatal collisions, causing great financial and reputation damage for companies such as Uber and Tesla, so we can understand why manufacturers and service providers want to lean toward caution,” says Chen.

“But the overly conservative behaviors exhibited in many autonomous driving systems stand to impact the smooth flow of traffic and the movement of passengers and goods, which can also have a negative impact on businesses and road safety.”

Additional coauthors are from UCLA and UC Irvine. The National Science Foundation funded the work.

Source: UC Irvine.

This article was originally published in Futurity. It has been republished under the Attribution 4.0 International license.