Automation, crowdsourcing add up to winning algorithm

The National Institute for Occupational Safety and Health turned to crowdsourcing to help it fine-tune an automation process that accomplished in hours or a day what took staff as long as a year to complete.

To build an algorithm that would automate the traditionally manual process of coding and filing workplace injury reports in occupational safety and health surveillance systems, the National Institute for Occupational Safety and Health (NIOSH) turned to crowdsourcing.

According to Stephen Bertke, research statistician at NIOSH’s Division of Field Studies and Engineering, this first-time approach to automation was the right one for the agency, which is part of the Centers for Disease Control and Prevention (CDC). NIOSH was able to quickly identify and begin using an algorithm that can accomplish in hours or a day what previously took as long as a year.

That’s because the main source of information on fatal and non-fatal workplace incidents is the unstructured free-text “injury narratives” in surveillance systems. Traditionally, employees have read these narratives and assigned standardized codes using the Bureau of Labor Statistics’ (BLS) Occupational Injury and Illness Classification System (OIICS).

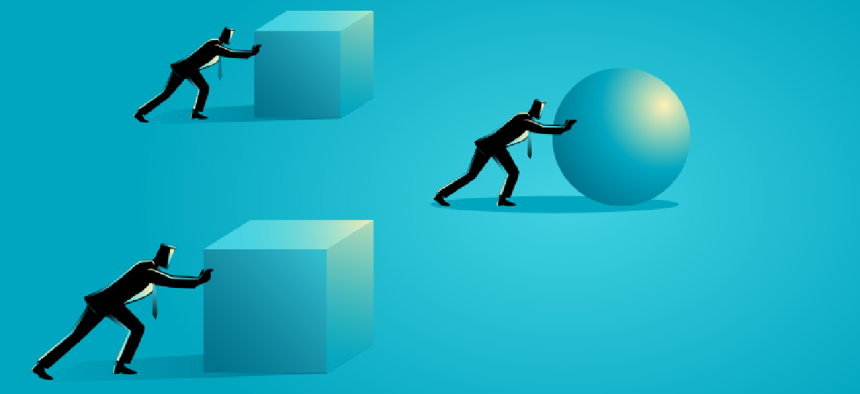

“This is completely manual. It’s a tedious task and there’s always a risk of just simple manual error, and so there’s a value then of having something that is automated,” Bertke said. “We do think that it is something that is not only a time savings, but is a more consistent and more accurate approach and really does a chunk of the work for you.”

Fine-tuning the algorithm to improve performance initially seemed a daunting task, however. A researcher looking for the right solution on their own could easily have become overwhelmed by the sheer number of tools and options available, Bertke said. Crowdsourcing was suggested by BLS, the Occupational Safety and Health Administration (OSHA) and the National Academies of Sciences, Engineering, and Medicine, the partners that sought to change the coding process by applying automation using artificial intelligence and machine learning.

“Instead of hiring and going out to someone … you tap into the minds of the crowd, and you end up with a product that is using the tools out there, and the crowd keeps [it] up-to-date on their own,” Bertke said. Crowdsourcing “spreads out the burden of trying all these different things. At the end, you have objectively an approach that works best on your data.”

NIOSH held two crowdsourcing competitions. The first was internal, open only to CDC employees. Ten teams volunteered their time to work with a set of 200,000 workers’ compensation claims and a test set of 25,000, Bertke said. Another 25,000 claims were held back to calculate the final score. The data comes from the National Electronic Injury Surveillance System, a random survey of U.S. hospitals in which medical professionals enter data on work-related injuries treated in emergency rooms.
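The three-way division Bertke describes, with one set to build on, one to test against and one held back for final scoring, can be sketched in Python. This is a minimal illustration rather than NIOSH’s actual procedure: the function name `split_records`, the random shuffle and the fixed seed are all assumptions, and only the set sizes come from the article.

```python
import random


def split_records(records, n_dev, n_test, n_holdout, seed=0):
    """Shuffle records and cut them into three non-overlapping sets.

    Hypothetical helper: the shuffle and the seed are illustrative
    assumptions, not details reported about the competition.
    """
    if n_dev + n_test + n_holdout > len(records):
        raise ValueError("not enough records for the requested split")

    shuffled = list(records)               # copy so the input is untouched
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle

    dev = shuffled[:n_dev]
    test = shuffled[n_dev:n_dev + n_test]
    holdout = shuffled[n_dev + n_test:n_dev + n_test + n_holdout]
    return dev, test, holdout


# Sizes from the article: 200,000 to work with, 25,000 to test on,
# and 25,000 held back for the final score. Integers stand in for records.
dev, test, holdout = split_records(range(250_000), 200_000, 25_000, 25_000)
```

Keeping the holdout set out of competitors’ hands until the end means the final score reflects data the algorithm has never seen.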

“The data is the direct hand notes from the person administering the patient, so, again, we have a free-text field describing the condition of the patient as they’re admitted into the ER,” he said. “We want to take this database, standardize what caused the injury to get a basic report of what types of injuries are happening where, and hopefully lead to preventative strategies to prevent these injuries.”

Nine algorithms were submitted, five of which exceeded NIOSH’s baseline script, which had an accuracy of 81%. The winning algorithm performs at 87% accuracy.

The other competition was external. NIOSH teamed with NASA’s Tournament Lab vendor, Topcoder, a crowdsourcing company, to conduct it. The objective was for the company’s global community of 1.5 million technologists to develop a natural language processing algorithm that could classify work-related injury records.

Posted in October 2019, the competition was open for about five weeks and attracted 388 unique registrants from 27 countries who submitted 961 unique algorithms, 46 of which increased accuracy above the baseline of 87% from the internal competition.

Participants had to use either the Python or R programming language and submit their solutions to Topcoder, which ran them on a provisional set of data and used the F1 score to measure performance. This means each submission received a weighted average of its scores across the multiple classification categories, producing a single overall score to compare with the baseline.
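To make the scoring idea concrete, here is a minimal sketch of a weighted F1 score in Python. It assumes each category’s F1 is weighted by how often that category appears in the true labels; the article does not say exactly how Topcoder weighted the categories, and the function name and the example labels are hypothetical.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Average per-category F1 scores, weighted by each category's
    share of the true labels (an assumed weighting scheme)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")

    support = Counter(y_true)            # true count per category
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, guess in zip(y_true, y_pred):
        if truth == guess:
            tp[truth] += 1               # correct assignment
        else:
            fp[guess] += 1               # guessed category was wrong
            fn[truth] += 1               # true category was missed

    total = len(y_true)
    score = 0.0
    for label, count in support.items():
        denom = 2 * tp[label] + fp[label] + fn[label]
        f1 = 2 * tp[label] / denom if denom else 0.0
        score += f1 * count / total      # weight by category frequency
    return score


# Hypothetical category labels, not real OIICS codes.
truth = ["fall", "fall", "contact", "contact"]
guess = ["fall", "contact", "contact", "contact"]
overall = weighted_f1(truth, guess)
```

Unlike plain accuracy, F1 penalizes an algorithm both for missing a category and for assigning it too freely, which matters when some injury types are far rarer than others.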

“You want to make sure the algorithm is as pure as possible, so it’s not optimized just for a particular dataset, so we run it on a final dataset,” said Topcoder CEO Michael Morris. “That is typically going to be a comprehensive, larger dataset and that determines the final score.”

Five winners were announced in January, and their code is posted on NASA’s GitHub page for public use.

“Using an approach like this can amplify the ability of a researcher,” Morris said. “Finding that particular talent in the traditional way just isn’t possible, but if you’re able to have the ‘net you can have with a model like this, you can get to that … ‘extreme value outcome,’ where you get to a point where you’re approaching a theoretical max for a problem in a reliable, predictable way.”

Bertke said he’s now testing the algorithm on workers’ compensation data to determine whether any tweaks are needed. NIOSH is also working to partner with state labor bureaus that haven’t been coding workplace injuries because of the manual burden. He’s hopeful that the automation will enable them to produce surveillance reports and use the resulting data “in a real and direct and immediate way.”